In real-world datasets, missing values are common — whether it’s medical records, loan applications, or user profiles. Handling them correctly is crucial for model performance and trustworthy insights.

In this blog, we’ll break down:

- What is Missingness?

- What is Simple Imputer?

- What is Missing Indicator?

- Why and when we use them in the real world

- How to implement step-by-step, ending with a production-ready pipeline

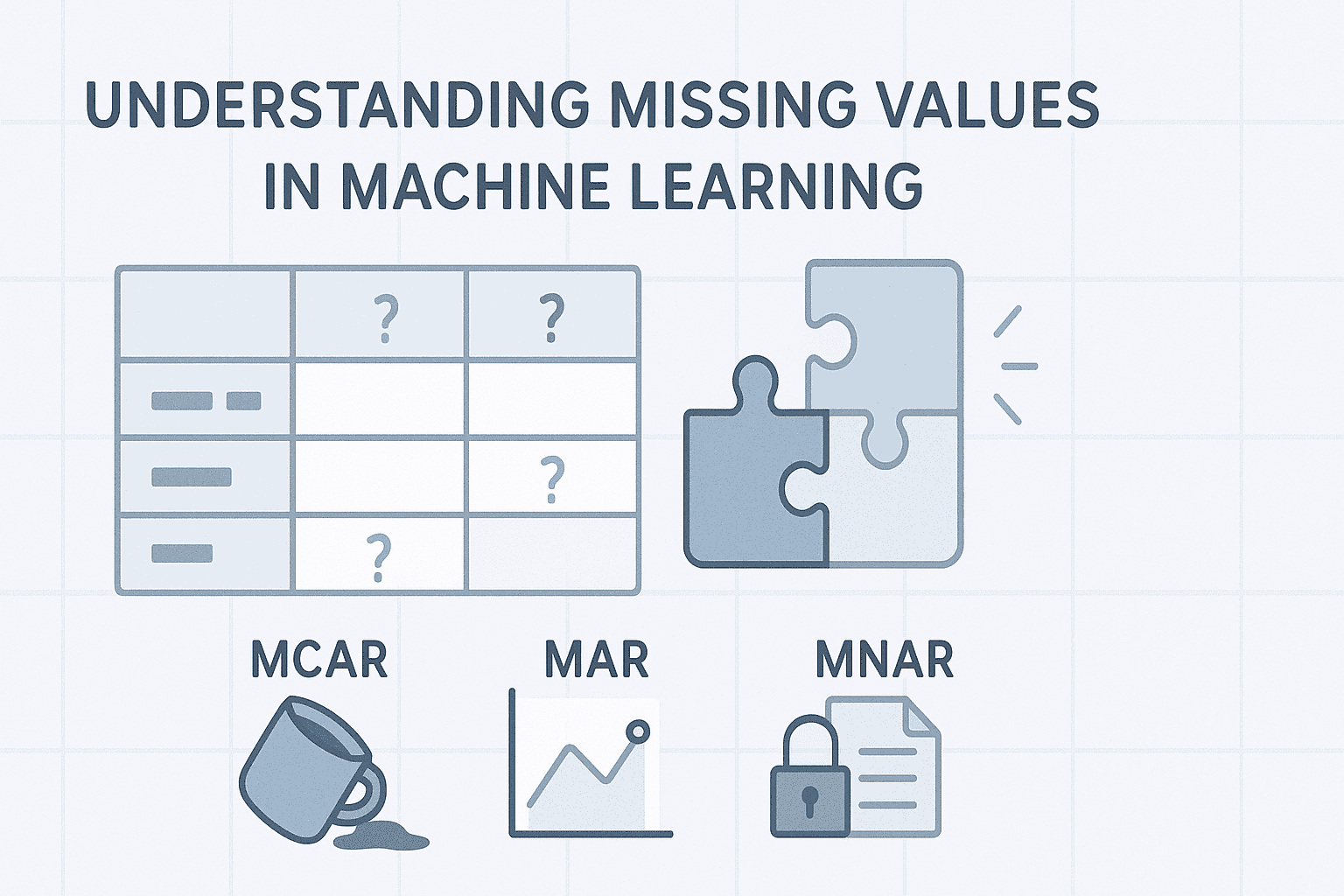

1. What is Missingness?

“Missingness” refers to the presence of missing values in a dataset and, more importantly, the reason or pattern behind those missing values.

It’s not just about the absence of a value — it’s about asking:

- Why is this value missing?

- Does the fact that it’s missing tell us something useful?

There are 3 types of missingness:

| Type | Description | Example |
|---|---|---|
| MCAR (Missing Completely At Random) | Missingness is unrelated to any value, observed or not | A server crashed randomly and dropped some records |
| MAR (Missing At Random) | Missingness depends on other observed variables | Young people are more likely to skip the income field |
| MNAR (Missing Not At Random) | Missingness depends on the missing value itself | Rich people don’t disclose their income |

In MAR and MNAR, the fact that a value is missing can carry information. This is where the Missing Indicator becomes powerful.
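To make the three types concrete, here is a minimal simulation sketch. The column names, probabilities, and thresholds are made up for illustration; real missingness is rarely this clean.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n = 1000

# Synthetic data, invented for this illustration only
df = pd.DataFrame({
    "age": rng.integers(18, 70, size=n),
    "income": rng.normal(60000, 15000, size=n),
})

# MCAR: every row has the same 20% chance of losing its income value
mcar_mask = rng.random(n) < 0.2

# MAR: the chance depends on another observed column (younger people skip more often)
mar_mask = rng.random(n) < np.where(df["age"] < 30, 0.5, 0.1)

# MNAR: the chance depends on the hidden value itself (high earners skip more often)
mnar_mask = rng.random(n) < np.where(df["income"] > 75000, 0.6, 0.05)

# Under MNAR the observed incomes are biased: the high values are the ones that vanish
income_mnar = df["income"].mask(mnar_mask)
print("true mean:", df["income"].mean())
print("observed mean under MNAR:", income_mnar.mean())
```

In the MNAR case the observed mean drifts below the true mean, and no fill value computed from the observed data can fully undo that. This is one reason to keep a flag for "was missing".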

2. What is Simple Imputer?

SimpleImputer is a tool from scikit-learn used to fill missing values using a simple strategy:

- Mean: Average of column

- Median: Middle value

- Most Frequent: Most common value

- Constant: A fixed value like 0 or “Unknown”

Example:

import pandas as pd

import numpy as np

from sklearn.impute import SimpleImputer

data = pd.DataFrame({

'Age': [25, np.nan, 30, np.nan, 45],

'Income': [50000, 60000, np.nan, 65000, np.nan]

})

imputer = SimpleImputer(strategy='median')

filled_data = imputer.fit_transform(data)

pd.DataFrame(filled_data, columns=data.columns)

Output:

Age Income

0 25.0 50000.0

1 30.0 60000.0

2 30.0 60000.0

3 30.0 65000.0

4 45.0 60000.0

💡 NaN values are replaced with the median of the column.

Why is Simple Imputer Important in Industry?

1. ML Models Can’t Handle NaNs

Many ML models (Linear Regression, Logistic Regression, SVMs, etc.) raise an error if NaN is present, so you must fill the gaps first. A few estimators, such as scikit-learn’s HistGradientBoosting models, handle NaN natively.

2. Fast and Efficient

Simple strategies (mean/median) are fast and effective for most numeric features.

3. Preprocessing Pipelines

It integrates well into scikit-learn pipelines (used in production systems).

4. Keeps the Center Stable

The median is barely affected by extreme values, so it is a safer fill than the mean when the data has outliers.
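The first point is easy to verify. A small sketch, using made-up numbers, of what happens when an estimator without NaN support meets a missing value:

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Tiny made-up dataset with one missing value
X = np.array([[25.0], [np.nan], [30.0], [45.0]])
y = np.array([0, 1, 0, 1])

try:
    LogisticRegression().fit(X, y)
    failed = False
except ValueError as err:
    # scikit-learn validates the input and refuses NaN for this estimator
    failed = True
    print("fit failed:", err)
```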

Where and When Do We Use Simple Imputer?

When to Use:

| Situation | Use |
|---|---|
| Numeric data | mean or median |
| Categorical data | most_frequent or constant |
| During preprocessing | Inside a scikit-learn Pipeline |
| When you want simplicity and speed | SimpleImputer is a good default |

Real-World Industry Use Cases

Loan Default Dataset

- Feature: NumberOfDependents

- Strategy: Fill missing with median (most realistic, avoids outliers)

Medical Dataset

- Feature: Blood Pressure

- Strategy: Fill with mean or median

Telecom (Churn) Dataset

- Feature: LastRechargeAmount

- Strategy: Fill with constant = 0 or median

E-commerce Dataset

- Feature: ProductRating

- Strategy: Fill with most_frequent or constant = “unknown”

Strategies Summary Table

| Strategy | Best For | Example Use Case |
|---|---|---|
| mean | Numeric, no outliers | Age, Salary in a clean dataset |
| median | Numeric, has outliers | Loan Amount, Medical costs |
| most_frequent | Categorical or repetitive | Gender, Country, Product Brand |
| constant | Fill all with a fixed value | “Unknown”, 0, etc. |
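As a rough illustration of the table, the sketch below applies all four strategies to two tiny made-up columns (LoanAmount and Brand are placeholder names). The extreme loan value shows why the mean and median can disagree so much.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Numeric column with one extreme value, to contrast mean and median
loan = pd.DataFrame({"LoanAmount": [100.0, 120.0, 110.0, np.nan, 5000.0]})
mean_fill = SimpleImputer(strategy="mean").fit_transform(loan)[3, 0]
median_fill = SimpleImputer(strategy="median").fit_transform(loan)[3, 0]
print("mean fill:", mean_fill)      # pulled up by the 5000 outlier
print("median fill:", median_fill)  # stays near the typical values

# Categorical column: fill with the mode, or with an explicit placeholder
brand = pd.DataFrame({"Brand": ["A", "B", "A", np.nan]}, dtype=object)
mode_fill = SimpleImputer(strategy="most_frequent").fit_transform(brand)
const_fill = SimpleImputer(strategy="constant", fill_value="Unknown").fit_transform(brand)
print(mode_fill[3, 0], const_fill[3, 0])
```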

3. Why Imputation Alone Can Be Risky

Let’s say a customer’s income was missing and we filled it with the median. That’s good, but:

We lose the signal that the income was originally missing.

Maybe customers who hide their income are more likely to default on a loan?

To keep this information, we use a Missing Indicator.

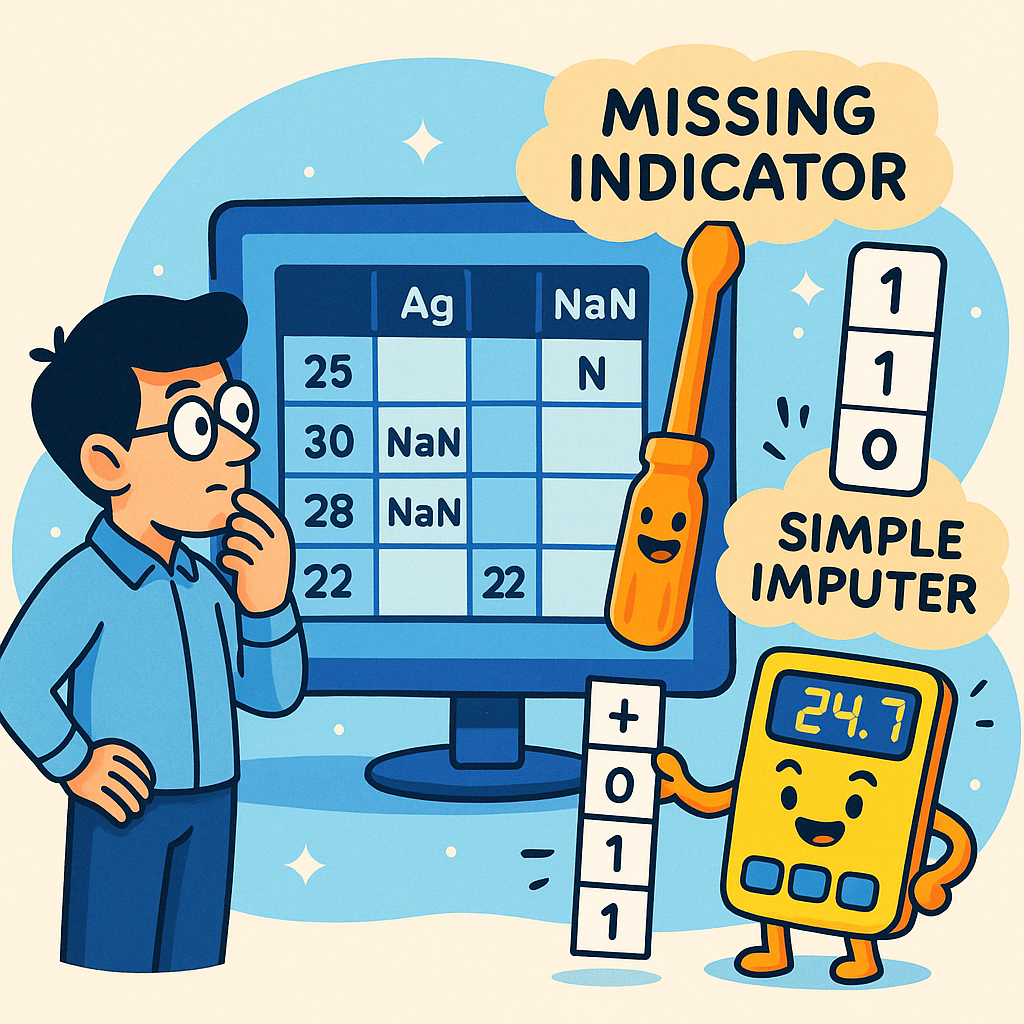

🔹 What is Missing Indicator?

A Missing Indicator (MI) is a binary feature (0 or 1) that tells whether a value was missing in the original dataset for a particular feature.

- 1 = Value was missing

- 0 = Value was present

It is not a method to fill the missing value, but a signal to the model that a value was originally missing.

🔹 Why Do We Use Missing Indicator? (Importance)

In many real-world datasets, missingness itself can carry information.

✅ For example:

- A high-income customer might refuse to disclose their salary — this “missing” value may actually indicate something about their behavior or category.

- In a medical dataset, a missing lab test might mean that the doctor didn’t find it necessary — which is a kind of signal!

Hence, instead of blindly imputing (e.g., filling with mean), we can add an extra “Missing Indicator” column, so the model knows what was imputed.

Where and When is Missing Indicator Used?

Missing Indicator is especially useful:

- In tree-based models (like Random Forest, XGBoost)

- When data is not missing completely at random

- When the fact that a value is missing is predictive

- In financial, healthcare, telecom, insurance, etc. industries
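One quick way to check whether missingness looks predictive is to compare the target rate between rows where the value is missing and rows where it is present. The sketch below uses a made-up loan table; the numbers are invented purely to show the mechanics.

```python
import numpy as np
import pandas as pd

# Made-up data for illustration only
loans = pd.DataFrame({
    "Income": [50000, np.nan, 62000, np.nan, 58000, np.nan, 71000, 45000],
    "Default": [0, 1, 0, 1, 0, 0, 0, 1],
})

# Group by "was Income missing?" and compare the default rate of each group
default_rate = loans.groupby(loans["Income"].isna())["Default"].mean()
default_rate.index = ["reported", "missing"]  # groupby sorts the keys as False, True
print(default_rate)
```

A clear gap between the two rates suggests an indicator column is worth trying. On real data, confirm it with proper validation rather than by eye.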

Real-Life Examples Where MI is Especially Useful

1. Loan Default Dataset:

- Feature: NumberOfDependents

- Some people did not report their dependents.

- Missing values could signal:

  - Hiding dependents → potential fraud

  - Single individuals → possibly fewer expenses → less risky

So we:

- Impute missing values (e.g., with median)

- Add a Missing Indicator column: NumberOfDependents_missing

2. Medical Dataset:

- Feature: Cholesterol_Level

- If missing, it might be:

  - Patient appeared healthy

  - Doctor didn’t request the test

So we:

- Impute with median

- Add Missing Indicator: Cholesterol_Level_missing

3. Telecom Dataset (Churn Prediction):

- Feature: LastRechargeAmount

- If missing, maybe the user has been inactive for a long time

Missingness = sign of likely churn!

How to Implement on Real Dataset (Using Python)

Let’s go step-by-step using pandas and scikit-learn.

Sample Dataset (Simulated)

import pandas as pd

import numpy as np

# Simulate a small dataset

data = pd.DataFrame({

'Age': [25, 30, np.nan, 45, np.nan],

'Income': [50000, 60000, 65000, np.nan, 70000],

'Loan_Status': [1, 0, 1, 0, 1]

})

Step 1: Add Missing Indicator Columns

for col in ['Age', 'Income']:
    # 1 where the original value was NaN, 0 otherwise
    data[col + '_missing'] = data[col].isnull().astype(int)

👉 Step 2: Impute the missing values (with median, for example)

for col in ['Age', 'Income']:
    # Assign the result back; fillna(..., inplace=True) on a selected column is deprecated in recent pandas
    data[col] = data[col].fillna(data[col].median())

👉 Final Dataset

print(data)

Output:

Age Income Loan_Status Age_missing Income_missing
0 25.0 50000.0 1 0 0
1 30.0 60000.0 0 0 0
2 30.0 65000.0 1 1 0
3 45.0 62500.0 0 0 1
4 30.0 70000.0 1 1 0

✅ Age_missing and Income_missing now tell the model that the original value was missing.

🔹 Using MissingIndicator from Scikit-learn

from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline

# Recreate the raw data: the manual steps above already filled the NaNs in `data`,
# and add_indicator only adds columns for features that contain NaNs when fitted
raw = pd.DataFrame({
    'Age': [25, 30, np.nan, 45, np.nan],
    'Income': [50000, 60000, 65000, np.nan, 70000]
})

# Define numeric columns
numeric_cols = ['Age', 'Income']

# Pipeline for numeric columns: impute + missing indicator
numeric_pipeline = Pipeline(steps=[
    ('imputer', SimpleImputer(strategy='median', add_indicator=True))
])

# Apply column transformer
transformer = ColumnTransformer(transformers=[
    ('num', numeric_pipeline, numeric_cols)
])

# Fit-transform: 2 imputed columns followed by 2 indicator columns
transformed_data = transformer.fit_transform(raw[numeric_cols])

Clarifying the Confusion

SimpleImputer(add_indicator=True)

This is usually the most convenient way to get missing indicators in scikit-learn:

- SimpleImputer fills the missing values (e.g., with mean/median/etc.)

- If you set add_indicator=True, it also appends a binary indicator column for each feature that had missing values when the imputer was fitted
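A small sketch of what that output looks like. The Tenure column is a hypothetical extra feature with no gaps, added only to show that it gets no indicator; the `_missing` suffix is our own naming choice, not something scikit-learn produces.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

X = pd.DataFrame({
    "Age": [25, 30, np.nan, 45, np.nan],
    "Income": [50000, 60000, 65000, np.nan, 70000],
    "Tenure": [1, 2, 3, 4, 5],  # hypothetical column with no missing values
})

imputer = SimpleImputer(strategy="median", add_indicator=True)
out = imputer.fit_transform(X)  # imputed columns first, indicator columns after

# indicator_.features_ lists the positions of the columns that had NaNs during fit
flagged = [X.columns[i] for i in imputer.indicator_.features_]
names = list(X.columns) + [c + "_missing" for c in flagged]

result = pd.DataFrame(out, columns=names)
print(result)
```

Only Age and Income get an indicator here, so the result has five columns: three imputed features plus two flags.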

So When Do You Use MissingIndicator Alone?

You use the MissingIndicator class separately only if:

- You don’t want to fill missing values, but just flag them.

- You already filled missing values in a different way, and now want to generate missing indicators separately.

✅ If You Want to Use MissingIndicator Separately

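from sklearn.impute import MissingIndicator

# Use data that still contains NaNs (the manual steps above filled `data` in place)
raw_data = pd.DataFrame({
    'Age': [25, 30, np.nan, 45, np.nan],
    'Income': [50000, 60000, 65000, np.nan, 70000]
})

indicator = MissingIndicator()  # default features='missing-only'
missing_flags = indicator.fit_transform(raw_data)

# indicator.features_ holds the positions of the columns that had NaNs during fit
missing_cols = [raw_data.columns[i] + '_missing' for i in indicator.features_]
df_flags = pd.DataFrame(missing_flags, columns=missing_cols)
print(df_flags)

This just creates boolean (True/False) indicators without imputing the data.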

✅ Question: Which imputation method should we use?

There’s no one-size-fits-all, but here’s a guide:

| Imputation Method | When to Use |
|---|---|
| SimpleImputer (mean/median) | Fast, works well for numeric data if distribution is symmetric or slightly skewed |
| KNNImputer | Use when nearby samples (rows) are similar. Best for small/medium datasets |
| IterativeImputer | Best when you want a model-based estimation of missing values. Powerful but slower |
| Most Frequent | Categorical variables: fill with mode |

Important: No matter which imputer you use, if you believe “missingness” contains signal, add a missing indicator column too!
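As a hedged sketch of the two heavier options: both KNNImputer and IterativeImputer accept add_indicator=True, so the indicator columns come for free. Note that IterativeImputer is still marked experimental in scikit-learn and needs an explicit enabling import. The data below is made up.

```python
import numpy as np
from sklearn.experimental import enable_iterative_imputer  # noqa: F401, must come before the IterativeImputer import
from sklearn.impute import IterativeImputer, KNNImputer

# Made-up [Age, Income] rows
X = np.array([
    [25.0, 50000.0],
    [30.0, 60000.0],
    [np.nan, 65000.0],
    [45.0, np.nan],
    [40.0, 70000.0],
])

# KNN: fill each gap from the 2 most similar rows
knn = KNNImputer(n_neighbors=2, add_indicator=True)
X_knn = knn.fit_transform(X)

# Iterative: model each feature from the other features
iterative = IterativeImputer(random_state=0, add_indicator=True)
X_iter = iterative.fit_transform(X)

# Both outputs: 2 imputed columns followed by 2 indicator columns
print(X_knn.shape, X_iter.shape)
```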

Question: Why should we add a new column like Age_missing if we already filled the value?

Here’s the real logic:

💡 When we impute, we “guess” the missing value.

But if you just replace the missing value with median or mean, you hide the fact that it was missing — and that missingness might carry predictive power.

So, we add Age_missing to tell the model:

“Hey! This value was originally missing. We filled it, but you should know it was NA.”

This gives the model two signals:

1. The filled value (e.g., Age = 30)

2. The fact that it was originally missing (e.g., Age_missing = 1)

Want to Think Like a Pro?

- Use SimpleImputer + add_indicator=True for fast, scalable ML pipelines.

- Use IterativeImputer or KNNImputer with add_indicator=True (both support it, so no manual indicator columns are needed) if you’re working with sensitive/predictive features (like Age, Income, Lab Results).

- Always test model performance with and without indicator columns → keep if model improves.
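The last tip can be sketched as a small experiment. Everything below is synthetic: we deliberately build a target that depends on whether income was hidden, so the indicator is expected to help here. On real data the gap may be smaller or absent.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

rng = np.random.default_rng(0)
n = 500

income = rng.normal(60, 15, size=n)  # synthetic income, in thousands
hidden = rng.random(n) < 0.3         # 30% of customers hide their income

# Synthetic target: hiding income raises the default probability
y = (rng.random(n) < np.where(hidden, 0.6, 0.2)).astype(int)

X = income.reshape(-1, 1).copy()
X[hidden, 0] = np.nan

# Same pipeline twice, with and without the indicator column
scores = {}
for use_indicator in (False, True):
    model = make_pipeline(
        SimpleImputer(strategy="median", add_indicator=use_indicator),
        LogisticRegression(max_iter=1000),
    )
    scores[use_indicator] = cross_val_score(model, X, y, cv=5, scoring="roc_auc").mean()

print("AUC without indicator:", scores[False])
print("AUC with indicator:", scores[True])
```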

Final Implementation Using Pipeline

import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.impute import SimpleImputer
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline

# Sample dataset (toy data: with 5 rows the accuracy number is not meaningful)

df = pd.DataFrame({

'Age': [25, 30, np.nan, 45, np.nan],

'Income': [50000, 60000, 65000, np.nan, 70000],

'Target': [0, 1, 0, 1, 0]

})

X = df[['Age', 'Income']]

y = df['Target']

# Create transformer: impute + indicator

imputer = SimpleImputer(strategy='median', add_indicator=True)

# Final pipeline with Random Forest

pipeline = Pipeline([

('imputer', imputer),

('model', RandomForestClassifier(random_state=42))

])

# Train/test split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Fit and predict

pipeline.fit(X_train, y_train)

preds = pipeline.predict(X_test)

# Accuracy
print("Test Accuracy:", accuracy_score(y_test, preds))

Why Use a Pipeline?

When working on a real ML project, especially in production or with cross-validation, you want to ensure:

- Preprocessing happens inside the training folds

- Imputation statistics (e.g., the median) are learned only from the training data

- No data leakage

That’s where pipelines help. They chain preprocessing + modeling into one safe and reproducible unit.
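To see what "learned only from training data" means, here is a minimal sketch with a made-up Income column. The imputer is fitted on the training rows and then reused, unchanged, on the test rows. A Pipeline does the same thing automatically inside every cross-validation fold.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split

# Made-up data with two gaps and one extreme value
X = pd.DataFrame({"Income": [40000, 50000, np.nan, 60000, 70000, np.nan, 80000, 200000]})
X_train, X_test = train_test_split(X, test_size=0.25, random_state=0)

imputer = SimpleImputer(strategy="median")
imputer.fit(X_train)                       # median learned from training rows only
X_test_filled = imputer.transform(X_test)  # the same training median is reused here

print("median stored by the imputer:", imputer.statistics_[0])
print("median of the training rows:", X_train["Income"].median())
```

Computing the median on the full dataset before splitting would let test rows influence the fill value, which is exactly the leakage a pipeline avoids.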

🎯 Final Thoughts

- Missingness matters.

- Don’t just fill the value — understand why it’s missing.

- Simple Imputer ensures your model can run.

- Missing Indicator ensures your model can learn from the absence.

Together, they help you build more robust, realistic, and high-performing models.

If you found this blog helpful, feel free to share it with your fellow learners or save it in your Machine Learning notes.

📌 Remember: In data science, small details like missing indicators can lead to big gains in model performance!