Ultimate Guide to Feature Scaling in Machine Learning: Techniques, Examples, and Best Practices

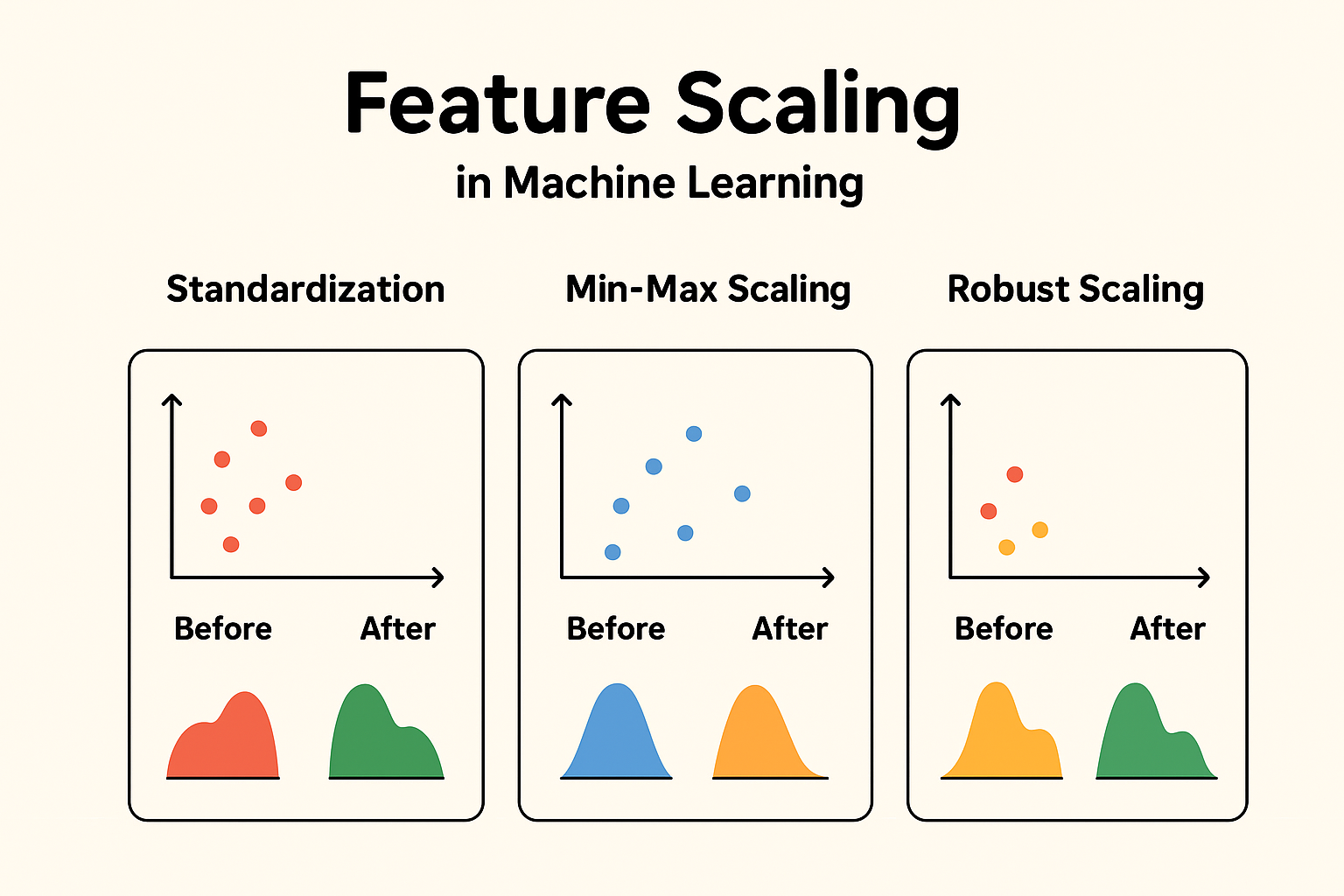

Feature scaling is a crucial preprocessing step in machine learning that rescales numerical features so that scale-sensitive models train and predict well. When a dataset's features have different scales or units, scaling can improve model accuracy, convergence speed, and overall efficiency. In this guide, we’ll explore what feature scaling is, why it’s essential, its impact on different models, and detailed breakdowns of popular methods like standardization and min-max scaling. We’ll also cover real-world examples, code snippets, and when to use (or avoid) scaling.

This article is optimized for search engines (SEO) with targeted keywords like “feature scaling techniques,” “standardization vs min-max scaling,” and “impact of scaling on machine learning models.” For answer engines (AEO), the content is structured to directly answer common queries. For AI optimization (AIO), the information is presented in a clear, structured format with tables, lists, and verifiable examples.

What is Feature Scaling?

Feature scaling, often loosely called data normalization or standardization, is a key technique in machine learning and data preprocessing. It transforms the values of numerical features in a dataset so they fall within a similar range or distribution. This prevents any single feature from dominating others due to differences in magnitudes or units. Common methods include standardization and min-max scaling, both covered in this guide.

Example of Feature Scaling

Consider a dataset with two features: “Age” (ranging from 20 to 60) and “Income” (ranging from $20,000 to $100,000). Without scaling, Income values are hundreds to thousands of times larger than Age values. After min-max scaling to [0, 1], the minimum of each feature maps to 0 and the maximum to 1 (Age 20 → 0 and 60 → 1; Income $20,000 → 0 and $100,000 → 1). This makes the features comparable, as both now span the same range.

Why is Feature Scaling Used?

Feature scaling is primarily used to keep any single feature from dominating others because of its magnitude or units, and to improve the accuracy and convergence speed of models that are sensitive to scale. In essence, it’s a preprocessing step to ensure fair contribution from all features during model training.
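To make the Age and Income example concrete, here is a minimal sketch of min-max scaling in plain Python. It assumes the usual min-max formula X' = (X - min) / (max - min); the sample values and the helper name `min_max_scale` are hypothetical and chosen only to fall inside the ranges quoted above.

```python
def min_max_scale(values):
    """Rescale values to [0, 1] using X' = (X - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature has no spread, so the formula would divide by zero.
        raise ValueError("cannot min-max scale a constant feature")
    return [(x - lo) / (hi - lo) for x in values]


# Hypothetical samples within the ranges from the example
ages = [20, 30, 40, 60]
incomes = [20_000, 45_000, 60_000, 100_000]

scaled_ages = min_max_scale(ages)
scaled_incomes = min_max_scale(incomes)

print(scaled_ages)     # -> [0.0, 0.25, 0.5, 1.0]
print(scaled_incomes)  # -> [0.0, 0.3125, 0.5, 1.0]
```

Both features now span [0, 1], so neither outweighs the other purely because of its units.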
Impact of Feature Scaling on Model Performance

Scaling generally has a positive impact on model performance for algorithms sensitive to feature magnitudes. It can lead to faster convergence and higher accuracy, as the examples in this section show. However, the impact varies by model type. For insensitive models (discussed later), scaling has negligible or no effect.

Example: KNN Without Scaling

Suppose you have two features, Age and Income, on their raw scales. KNN calculates Euclidean distance:

$$\text{distance} = \sqrt{(\text{Age}_1 - \text{Age}_2)^2 + (\text{Income}_1 - \text{Income}_2)^2}$$

Because income is much larger in magnitude, the distance is dominated by income differences and the effect of age is largely ignored. After scaling both features to [0, 1], both contribute on a comparable footing to the distance metric, which typically leads to better classification accuracy. This is exactly why scaling is critical for distance-based models.

Another Example: Linear Regression with Gradient Descent

Let’s illustrate using a simple linear regression model trained with gradient descent on a dataset where features have vastly different scales. The target is $y = w_1 f_1 + w_2 f_2 + w_0 + \text{noise}$, where $f_1$ ranges from 0 to 1 and $f_2$ from 0 to 1000.

In this setup, scaling improved both speed (5x faster convergence) and accuracy (lower error) by balancing the loss landscape.

Impacts on the Model if Not Using Feature Scaling

If scaling is not used, scale-sensitive models can converge more slowly and lose accuracy, as the following results show.

Example Impact

In the same linear regression setup above, without scaling, the model’s predictions on test data had ~7x higher MSE (5.9 vs. 0.8 with scaling). For KNN on an iris-like dataset with unscaled features (petal length in cm vs. sepal width in mm), accuracy dropped from 95% to 70% because distance calculations were dominated by the feature with the larger numeric values.

Models Not Impacted by Feature Scaling

Some models are invariant to feature scaling because they don’t rely on magnitudes, distances, or gradients in the same way. Tree-based models (decision trees, random forests, XGBoost) and Naive Bayes fall in this group, as the summary table shows.

Summary Table: Model Types and Scaling Impact

| Model Type | Scaling Impact? | Reason |
| --- | --- | --- |
| KNN, K-Means | ✅ High | Uses distance measures |
| SVM (RBF/poly kernels) | ✅ High | Uses distances in kernel space |
| Logistic / Linear Regression | ✅ Medium | Gradient descent & regularization |
| Neural Networks | ✅ Medium–High | Gradient descent benefits from scaling |
| PCA, LDA | ✅ High | Variance-based methods |
| Decision Trees / RF / XGBoost | ❌ Low | Based on feature splits |
| Naive Bayes | ❌ Low | Based on probability, not distance |

Does Feature Scaling Negatively Impact Performance?

In general, scaling does not negatively impact the performance of machine learning algorithms when applied correctly. For most algorithms, scaling is either beneficial or neutral.

Potential for Negative Impact

For scale-insensitive models, scaling has no direct negative impact on performance. However, it could introduce:

Does Feature Scaling Affect the Distribution of Data?

When we say “distribution” in this context, we typically refer to the shape of the data distribution (e.g., whether it’s normal, skewed, uniform, etc.), as seen in a histogram or density plot. Linear scaling methods such as standardization and min-max scaling shift and/or rescale the data but do not change the underlying distribution shape.

When to Be Cautious

While scaling preserves distribution shape, be cautious:

Types of Feature Scaling

Now, let’s dive deeper into specific types of feature scaling.

What is Standardization?

Standardization, also known as Z-score normalization, is a preprocessing technique that transforms numerical features in a dataset so that they have a mean of 0 and a standard deviation of 1. This centers the data around zero and scales it based on how spread out the values are. It does not make the data Gaussian: the result matches a standard normal distribution (mean 0, variance 1) only if the original feature was already normally distributed.

Formula:

$$X' = \frac{X - \mu}{\sigma}$$

where $X$ is the original value, $\mu$ is the mean of the feature, $\sigma$ is the standard deviation of the feature, and $X'$ is the standardized value.
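The formula can be checked numerically. The following is a minimal sketch in plain Python, assuming the population standard deviation for σ and a hypothetical right-skewed sample of incomes; the helper names `standardize` and `skewness` are illustrative, not taken from any library. It shows that the standardized values have mean 0 and standard deviation 1 while the skewness, a measure of distribution shape, stays the same.

```python
import statistics


def standardize(values):
    """Apply X' = (X - mu) / sigma with the population standard deviation."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    return [(x - mu) / sigma for x in values]


def skewness(values):
    """Population skewness: third central moment over variance ** 1.5."""
    mu = statistics.fmean(values)
    m2 = statistics.fmean([(x - mu) ** 2 for x in values])
    m3 = statistics.fmean([(x - mu) ** 3 for x in values])
    return m3 / m2 ** 1.5


# Hypothetical right-skewed sample (one large value pulls the tail to the right)
incomes = [20_000, 22_000, 25_000, 30_000, 40_000, 100_000]
z = standardize(incomes)

print("mean after:", statistics.fmean(z))       # approximately 0
print("std after:", statistics.pstdev(z))       # approximately 1
print("skewness before:", skewness(incomes))
print("skewness after:", skewness(z))           # same value up to rounding
print("range after:", min(z), max(z))           # not confined to [0, 1]
```

The skewed sample stays skewed after standardization, which is why the transform alone cannot turn a non-Gaussian feature into a Gaussian one.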
This method doesn’t bound the values to a specific range (unlike Min-Max scaling), so it can produce negative values or values greater than 1. It’s particularly useful because it preserves the shape of the original distribution while making features comparable.

Visual Representation: a step-by-step comparison, starting from the original data (mean ≠ 0, variance ≠ 1).

When is Standardization Used? (With Examples)

Standardization is used when:

When Not to Use It:

Examples:

How is Standardization Used? (With Example and Step-by-Step)

Standardization is typically implemented using libraries like scikit-learn in Python. Here’s a step-by-step guide with a simple numerical example: feature values [1, 4, 5, 11] (e.g., representing “Scores” in a test).

Step-by-Step Process:

In code (using scikit-learn): Here’s how to standardize and verify
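As a minimal sketch of that scikit-learn step, the block below standardizes the example scores [1, 4, 5, 11] with `StandardScaler` and verifies the result against the formula X' = (X - μ) / σ. It assumes default `StandardScaler` settings, which use the population standard deviation (the same as NumPy's default `ddof=0`); the variable names are illustrative.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Scores from the example. scikit-learn expects a 2D array of shape
# (n_samples, n_features), hence the reshape to a single column.
scores = np.array([1, 4, 5, 11], dtype=float).reshape(-1, 1)

# Fit learns the mean and standard deviation; transform applies the formula.
scaler = StandardScaler()
scaled = scaler.fit_transform(scores)

# Manual check with X' = (X - mu) / sigma, population std (ddof=0)
mu = scores.mean()     # 5.25
sigma = scores.std()   # NumPy's default is ddof=0
manual = (scores - mu) / sigma

print("learned mean:", scaler.mean_[0])
print("learned std:", scaler.scale_[0])
print("scaled values:", scaled.ravel())
print("mean after scaling:", scaled.mean())   # approximately 0
print("std after scaling:", scaled.std())     # approximately 1
print("matches manual formula:", np.allclose(scaled, manual))
```

In a real pipeline, the scaler would usually be fit on the training split only and then reused to transform validation and test data, so that statistics from held-out data do not leak into training.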