AI Race Heats Up: GPT-5.1, China’s Open-Source Surge, Google’s Innovations

The AI war just entered a new phase, and it’s nuclear. Over 50 major AI updates dropped in the last seven days, signaling a rapid acceleration in the global AI race. From OpenAI’s user-centric GPT-5.1 refinements and China’s groundbreaking open-source models to Google’s ambitious space-bound AI and critical legal battles shaping online shopping, the pace of innovation is unprecedented. This week saw major shifts across the board, affecting how we interact with chatbots, use AI in commerce, and even license celebrity voices.

– OpenAI’s GPT-5.1 offers “Instant” and “Thinking” modes, adaptive reasoning, and new personality options for more natural, efficient AI interactions.
– China’s Open-Source Prowess: Moonshot AI’s Kimi K2 Thinking and Baidu’s Ernie 5.0/4.5 challenge closed models, driving innovation and accessibility.
– Google’s Diverse Innovations span AI-powered image doodling (Mixboard), enhanced conversational AI (Gemini Live), privacy (Private AI Compute), and Project Suncatcher for space-based AI.
– Amazon vs. Perplexity: A landmark lawsuit is underway, set to redefine the future of AI shopping assistants and e-commerce.
– Ethical Voice Cloning: ElevenLabs’ Iconic Marketplace provides a legal platform for companies to license celebrity voices, ensuring consent and compensation.

As AI continues its exponential growth, how will these breakthroughs redefine our work, our daily lives, and even our perception of reality?

#AIUpdates #TechInnovation #ArtificialIntelligence #FutureOfAI #DigitalTransformation

Humanoid Robotics: China’s Rapid Advance Amidst Global Shifts

The humanoid robotics space just had one of its most explosive weeks this year, revealing a split between groundbreaking innovation and stark reality checks. China showcased rapid advances, with robots smoothly navigating home environments, tackling industrial tasks, and reaching mass deployment. Other developments, meanwhile, highlighted ongoing challenges. This past week underscored both the immense potential and the practical hurdles facing the industry. Here are the key takeaways from this pivotal period:

– MindOn’s Unitree G1 demonstrated unprecedented environmental generalization, smoothly performing complex household tasks with human-like fluidity.
– Unitree unveiled the G1D, a wheeled humanoid designed for industrial speed and repetitive tasks, supported by a comprehensive software platform for data generation and deployment.
– UBTech confirmed the mass shipment of hundreds of Walker S2 humanoids to industrial facilities, a significant milestone in large-scale commercial deployment, enabled by innovations like its battery-swapping system.
– Globally, the industry faces critical questions about battery life, long-term reliability, safety around people, maintenance costs, and price points low enough for widespread adoption.

Are we truly on the cusp of mainstream humanoid adoption, or does the recent chaos point to fundamental challenges still to overcome?

#HumanoidRobotics #AI #Robotics #Automation #TechInnovation

Mastering ChatGPT Study Mode for Accelerated Learning

Stop just getting answers from ChatGPT. Start learning smarter and remembering more with its built-in Study Mode. Many of us use AI for quick information, but ChatGPT’s “Study Mode” is designed to change how we learn. Far from being a simple answer machine, Study Mode turns ChatGPT into a personalized tutor. It uses active learning techniques and Socratic questioning to deepen understanding and improve retention. It’s about building knowledge, not just consuming facts. Here are key strategies to get the most out of Study Mode:

– Engage with the AI’s questions: Actively participate in the dialogue to prime your brain for deeper understanding.
– Break down complex topics: Use its ability to simplify and scaffold learning step by step.
– Proactively quiz yourself: Use active recall for stronger memory retention by testing your knowledge.
– Personalize your learning path: Tailor the AI’s teaching style to your background and preferred learning methods.
– Use visual input: Upload diagrams and images for interactive, multimodal explanations and quizzes.
– Embrace mistakes: Treat Study Mode as a judgment-free space to learn from errors and refine your understanding.

Ready to transform your learning journey? Which strategy will you try first to unlock ChatGPT’s full potential?

#ChatGPT #AIinEducation #LearningStrategies #ActiveLearning #StudyHacks

Jony Ive & Sam Altman Redefine Tech: A Screenless AI Future for Well-being

Is your phone making you anxious? The man who designed the iPhone, Jony Ive, admits our tech relationship is “uncomfortable.” Now, he’s teaming with OpenAI’s Sam Altman to build something radically different. This visionary collaboration aims to create a “third core device” alongside your phone and laptop: a screenless, palm-sized AI gadget designed to transform how we interact with technology. Unlike current devices, its primary goal is to improve emotional well-being, reducing anxiety and fostering genuine connection, rather than just boosting productivity. Here are key takeaways about this ambitious project:

– Discover a screenless, palm-sized AI companion designed to understand your life and environment.
– Experience technology focused on emotional well-being, proactively assisting without constant screen interaction.
– Witness a revolutionary approach to AI hardware, built from the ground up for seamless integration.
– Anticipate a device that aims to make you happier, less stressed, and more connected to what truly matters.

Could this be the technology that helps us become our “better selves”?

#AIHardware #JonyIve #SamAltman #OpenAI #FutureofTech

China Unveils Industrial-Grade Quantum Photonic Chip for AI Acceleration

A groundbreaking quantum photonic chip from China is poised to redefine AI and data center capabilities, signaling a quiet shift in global tech competition. Developed by CHIPX and Turing Quantum, this industrial-grade optical quantum chip uses light for computation. It offers a radically more efficient and scalable solution than traditional electronic systems. The chip integrates over a thousand optical components onto a 6-inch wafer, a significant leap in photonic integration. It also promises to address critical data center challenges such as heat generation and power consumption. Key takeaways from this development:

– According to developer reports, it achieves a thousandfold speedup over Nvidia GPUs for specific AI workloads.
– Deployment time for complex quantum-classical hybrid systems is reportedly reduced from months to just two weeks.
– Computing with light vastly improves energy efficiency and reduces heat, which is crucial for training large AI models.
– Its monolithic design allows high component density and integration, attracting attention from researchers worldwide.
– China has already established a pilot production line capable of producing 12,000 wafers annually, a clear sign of intent to industrialize this technology.

Is this the beginning of a hybrid computing era in which photons take the lead in AI acceleration? Share your thoughts below.

#QuantumComputing #Photonics #AI #DataCenters #TechInnovation

AI Unleashed: Microsoft’s Mico, Google’s Quantum & Flame, and the Call for Ethical AI

Microsoft just brought back Clippy, but not the way you remember it. It’s now an AI orb named Mico that reacts to your voice, remembers what you say, and even changes expression mid-conversation. Over at Google, engineers built Flame, a system that adapts AI detection models in minutes on a regular CPU. They then followed it up with a quantum chip that just ran an algorithm 13,000 times faster than a supercomputer. Meta quietly upgraded Docusaurus with an AI search assistant that lets you chat directly with your documentation. And while all that was happening, Microsoft’s AI chief, Mustafa Suleyman, drew a line in the sand. He called out OpenAI and xAI for adding adult content to their chatbots and warned that this kind of technology could become very dangerous. So, let’s talk about it.

Let’s start with Microsoft, because Mico is honestly one of the strangest throwbacks in years. Almost 30 years after Clippy blinked onto our screens as that slightly irritating paperclip in Office, Microsoft decided it’s time to bring the idea back, but evolved. Mico, short for Microsoft Copilot, lives inside Copilot’s voice mode as a small glowing orb that reacts while you talk. It smiles, frowns, blinks, and even tilts its head when the tone of your voice changes. The company has been testing it for months and is now turning it on by default in the United States. “Clippy walked so that we could run,” said Jacob Andreou, Microsoft’s Vice President of Product and Growth. He wasn’t kidding. Mico actually uses Copilot’s new memory system to recall details about what you’ve said before and the projects you’re working on. Microsoft also gave Mico a “Learn Live” mode. It’s a Socratic-style tutor that doesn’t just answer questions but walks you through concepts with whiteboards and visuals. That makes it perfect for students cramming for finals or anyone practicing a new language. That feature alone makes it feel more like an actual companion than a gimmick.

The whole move ties into what Mustafa Suleyman, Microsoft AI’s Chief Executive Officer, has been hinting at. He wants Copilot to have a real identity. He literally said it “will have a room that it lives in and it will age.” The marketing push is wild, too: new Windows 11 ads call it “the computer you can talk to.” A decade ago, Microsoft tried something similar with Cortana, and we all know how that ended. The app was shut down on Windows 11 after barely anyone used it. Mico is far more capable than Cortana ever was, but the challenge is the same: convincing people that talking to a computer isn’t awkward. Still, Microsoft is adding little Easter eggs to make it fun. If you poke Mico rapidly, something special happens. So, even in 2025, Clippy’s ghost lives on.

Now, over at Google, the story isn’t throwback vibes; it’s raw horsepower. Their research team rolled out something called Flame, which basically teaches an AI to get really good at a niche task, fast. Here’s the problem they’re fixing. Big open-vocabulary detectors like OWL-ViT v2 do fine on normal photos, then fall apart on tricky material like satellite and aerial images. Angled shots, tiny objects, and lookalike categories (think chimney versus storage tank) trip them up. Instead of grinding for hours on big GPUs, Google built Flame so you can tune the system in roughly a minute per label on a regular CPU.

Here’s the flow in plain terms. First, you let a general detector, say OWL-ViT v2, scan the images and over-collect possible hits for your target, like “chimney.” Flame then zeroes in on the toss-ups (the ones it’s not sure about) by grouping similar cases and pulling a small, varied set. You quickly tag around 30 of those as “yes” or “no.” With that tiny batch, it trains a lightweight helper: a small filter built on an RBF-SVM or a simple two-layer MLP. That filter keeps the real finds and throws out the fakes without touching the main model. The gains are big and easy to read.
On the DOTA benchmark (15 aerial categories), stacking Flame on RS-OWL-ViT-v2 takes zero-shot mean average precision from 31.827% to 53.96% with just 30 labels. On DIOR (23,000+ images), it jumps from 29.387% to 53.21%. The chimney class alone goes from 0.11 AP to 0.94: night and day. And this all runs on a CPU in about a minute per label. The base model stays frozen, so you keep its broad knowledge while the tiny add-on nails the specifics. In practice, that means you get a local specialist without burning GPU hours or collecting thousands of examples. That’s exactly the kind of quick, high-impact tweak teams want.

But while Flame was quietly reshaping how models adapt, another Google division was shaking up physics itself. The company’s quantum team finally delivered what looks like the first practical use case of quantum computing. Their Willow chip, a 105-qubit processor, just ran an algorithm called “Quantum Echoes” 13,000 times faster than the best classical supercomputer. That’s not marketing spin: it’s a verifiable result, confirmed by comparing the output directly with real-world molecular data. The algorithm simulates nuclear magnetic resonance experiments, the same science behind MRI machines. It models how atoms’ magnetic spins behave inside molecules, which is insanely complex for classical machines. Google’s engineers managed to send a ping through the qubit array and read millions of effects per second without disturbing the system. In effect, they peeked inside quantum states without breaking them. The outcome was deterministic, something rare in quantum computing, where results are usually probabilistic guesses. That verification step is why this run matters so much: it shows the chip isn’t just producing noise, but usable, reproducible data. The Willow experiment represents the largest data collection of its kind in quantum research. It also pushed the error rate low enough to make practical results possible.
It’s not cracking encryption yet, but it’s proof that quantum chips can now outperform classical supercomputers on specific real-world tasks. Google is calling it Milestone 2 on its roadmap, and next up is building a long-lived logical qubit.
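The Flame flow described earlier can be sketched in Python with scikit-learn. The steps are: over-collect, pick the uncertain toss-ups, label about 30, and train a small filter on top of a frozen detector. This is only an illustration under stated assumptions, not Google’s implementation:
- the detector output is replaced by hypothetical synthetic embeddings and confidence scores;
- the 0.3 to 0.7 uncertainty band and the k-means diversity step are assumptions;
- the human labeler is simulated with hidden ground truth.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.svm import SVC

rng = np.random.default_rng(0)

# Hypothetical stand-in for a frozen detector's output on aerial images:
# every candidate box gets a 2-D embedding and a confidence score for "chimney".
n = 2000
is_chimney = rng.random(n) < 0.3                       # hidden ground truth
emb = rng.normal(size=(n, 2)) + np.where(is_chimney[:, None], 1.0, -1.0)
score = 1 / (1 + np.exp(-(emb.sum(axis=1) + rng.normal(0, 1.5, n))))

# Step 1: over-collect possible hits with a permissive threshold.
cand = np.flatnonzero(score > 0.05)

# Step 2: keep the toss-ups, group similar ones with k-means,
# and take the candidate closest to each cluster center (a small, varied set).
unsure = cand[(score[cand] > 0.3) & (score[cand] < 0.7)]
km = KMeans(n_clusters=30, n_init=10, random_state=0).fit(emb[unsure])
picked = np.unique([
    unsure[np.argmin(np.linalg.norm(emb[unsure] - center, axis=1))]
    for center in km.cluster_centers_
])

# Step 3: a person tags each pick yes/no (simulated with the hidden truth).
labels = is_chimney[picked]

# Step 4: fit a lightweight RBF-SVM filter; the detector is never retrained.
filt = SVC(kernel="rbf", gamma="scale").fit(emb[picked], labels)

# Step 5: keep only the candidates the filter accepts.
kept = cand[filt.predict(emb[cand]).astype(bool)]

precision_before = is_chimney[cand].mean()
precision_after = is_chimney[kept].mean()
print(f"labeled {len(picked)} boxes; precision {precision_before:.2f} -> {precision_after:.2f}")
```

Swapping `SVC` for a small `sklearn.neural_network.MLPClassifier` would mirror the two-layer MLP option mentioned in the transcript. Either way, only the filter is trained.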

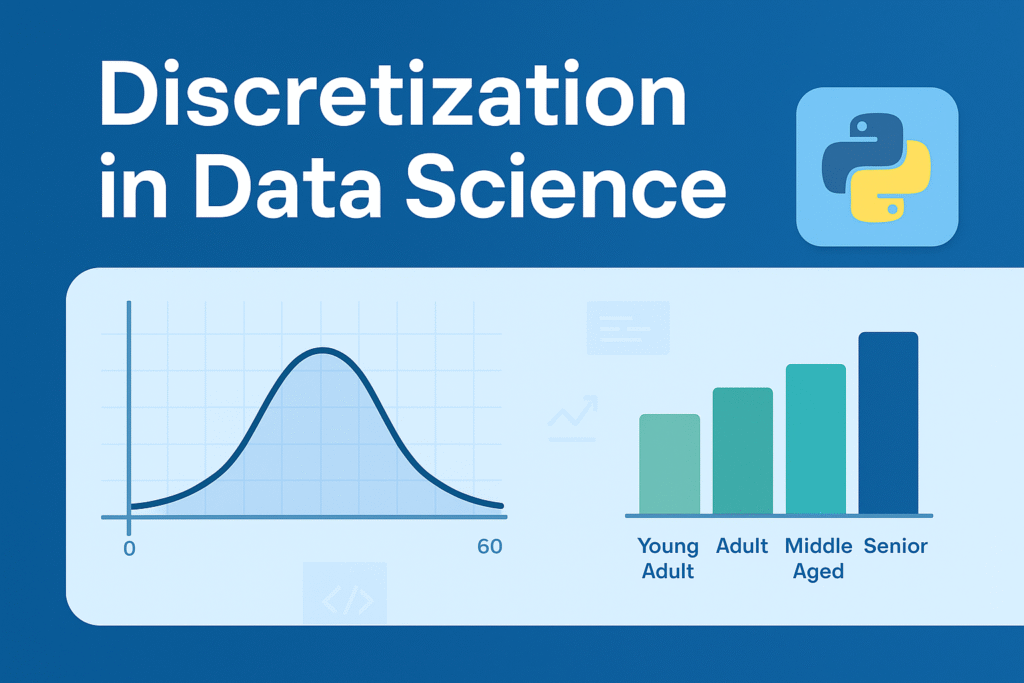

Mastering Discretization in Data Science: A Step-by-Step Guide with Python and sklearn Examples

Welcome to my data science blog! If you’re diving into machine learning preprocessing techniques, you’ve likely encountered the concept of discretization. In this comprehensive guide, we’ll explore what discretization is, why it’s essential, its types, and real-world use cases. We’ll also cover how to implement it using Python’s scikit-learn (sklearn) library. Whether you’re a beginner data scientist or an experienced practitioner, this post will help you understand and apply discretization to turn continuous data into actionable insights. The article grew out of a detailed discussion I had as a data science mentor, and we’ll go through everything from the basics to implementation, step by step. Let’s get started!

What is Discretization in Data Science?

Discretization is a data preprocessing technique in data science and machine learning. It transforms continuous numerical features into discrete categories or intervals, also called bins or buckets. Continuous features are variables that can take any value within a range, like height, temperature, or income. The process groups continuous values into a finite number of categorical groups, making the data more manageable and interpretable. Think of it like turning a smooth spectrum of colors into distinct color bands (e.g., red, orange, yellow) for easier analysis.
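To make the definition concrete, here is a minimal pandas sketch of the customer-age example discussed below. The ages, cut points, and group labels are hypothetical choices for illustration. In practice they would come from your data and business needs.

```python
import pandas as pd

# Hypothetical customer ages (a continuous feature)
ages = pd.Series([17, 19, 23, 29, 34, 41, 47, 52, 60, 68, 75], name="age")

# Step 1: choose bin edges (illustrative, domain-driven cut points)
edges = [0, 18, 30, 45, 60, 120]
# Step 2: give each interval a readable label
labels = ["Teen", "Young Adult", "Adult", "Middle-Aged", "Senior"]
# Step 3: map every age to its interval; right=False makes bins left-closed, e.g. [18, 30)
groups = pd.cut(ages, bins=edges, labels=labels, right=False)

print(pd.DataFrame({"age": ages, "group": groups}))
# Step 4: inspect how many customers fall into each group
print(groups.value_counts(sort=False))
```

For data-driven rather than hand-picked edges, `pd.qcut` (equal-frequency bins) is the usual alternative to `pd.cut`.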
Discretization is particularly useful when working with algorithms that perform better with categorical data, or when you want to reduce the complexity of continuous variables.

Explanation with Example: Step by Step

Let’s use a real-world example to illustrate discretization. Suppose we have a dataset of customer ages (a continuous variable) from an e-commerce company, and we want to discretize it into age groups for marketing analysis. Here’s the step-by-step process:

This process reduces the infinite possibilities of continuous data to a handful of groups, making patterns easier to spot (e.g., “Young Adults” buy more gadgets).

Why is Discretization Used?

Discretization is used for several key reasons:

However, it can lead to information loss if bins are poorly chosen, so it’s a trade-off.

What are Its Use Cases?

Discretization is applied in various scenarios:

A classic use case is in decision tree algorithms (like ID3 or C4.5), where discretization helps split nodes based on entropy.

How Much is It Used in Industry?

In industry, discretization is a standard, frequently used technique in data preprocessing pipelines, especially in sectors like finance, healthcare, e-commerce, and telecom. Exact usage figures are not well documented. As a rough estimate, it is employed in about 20-40% of ML projects involving continuous features, and the share varies widely by domain. It’s not as ubiquitous as normalization or encoding, but it’s essential when working with algorithms that handle continuous data poorly. In big tech (e.g., Google, Amazon), it’s integrated into automated pipelines via libraries like pandas, scikit-learn, or TensorFlow. If you’re building models, you’ll encounter it often, but how much you use it depends on the dataset. It shows up more in exploratory analysis than in deep learning, where continuous inputs are preferred.

What are Its Types?
Discretization methods are broadly classified into two categories: unsupervised (no target variable needed) and supervised (uses the target variable to choose better bins). Here are the main types:

Which Type is Mostly Used?

In practice, equal-frequency (quantile-based) binning is the most commonly used type in industry. It is especially popular for skewed distributions, which are common in real-world data like incomes or user-engagement metrics. It’s easy to implement in pandas (pd.qcut()) and is often preferred over equal-width binning because it produces roughly balanced bins, so no bin ends up nearly empty. Supervised methods like entropy-based binning are popular with tree-based models but less so for general preprocessing. Always choose based on your data; start with unsupervised methods for exploration!

Using KBinsDiscretizer to Discretize Continuous Features

This example compares the predictions of linear regression (a linear model) and a decision tree (a tree-based model), with and without discretization of real-valued features. Before discretization, the linear model is fast to build and relatively easy to interpret, but it can only model linear relationships. The decision tree, by contrast, can build a much more complex model of the data. One way to make a linear model more powerful on continuous data is discretization (also known as binning). In the example, we discretize the feature and one-hot encode the transformed data. Note that if the bins are not reasonably wide, the risk of overfitting increases substantially, so the discretizer parameters should usually be tuned under cross-validation.

After discretization, linear regression and the decision tree make exactly the same predictions. Because the features are constant within each bin, any model must predict the same value for all points within a bin.
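The claim that both models predict identically after binning is easy to check. The sketch below follows the setup described above: a noisy 1-D sine wave, 10 equal-width bins, and one-hot encoding. The sample size, noise level, and random seed are illustrative assumptions.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import KBinsDiscretizer
from sklearn.tree import DecisionTreeRegressor

# Toy 1-D regression problem: a noisy sine wave
rng = np.random.RandomState(42)
X = rng.uniform(-3, 3, size=100).reshape(-1, 1)
y = np.sin(X).ravel() + rng.normal(size=len(X)) / 3

# Without binning: a straight line vs. a fully grown tree
lin_raw = LinearRegression().fit(X, y)
tree_raw = DecisionTreeRegressor(random_state=0).fit(X, y)

# With binning: 10 equal-width bins, one-hot encoded as a dense array
enc = KBinsDiscretizer(n_bins=10, encode="onehot-dense", strategy="uniform")
X_binned = enc.fit_transform(X)
lin_bin = LinearRegression().fit(X_binned, y)
tree_bin = DecisionTreeRegressor(random_state=0).fit(X_binned, y)

# Binned: both models end up predicting the mean of y inside each bin
same_binned = np.allclose(lin_bin.predict(X_binned), tree_bin.predict(X_binned), atol=1e-6)
# Raw: the tree memorizes the training points, the line cannot bend
same_raw = np.allclose(lin_raw.predict(X), tree_raw.predict(X), atol=1e-6)
print("identical after binning:", same_binned)
print("identical without binning:", same_raw)
```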
Compared with the results before discretization, the linear model becomes much more flexible while the decision tree becomes much less flexible. Note that binning features generally has no beneficial effect for tree-based models, as these models can learn to split the data anywhere.

How to Implement Discretization Using sklearn: A Practical Tutorial

As a Data Scientist, I’ll guide you through implementing discretization with scikit-learn (sklearn) in Python, step by step. We’ll use the KBinsDiscretizer class, sklearn’s primary tool for discretization. I’ll cover its key parameters, its different strategies, and practical considerations so you can apply it effectively in a machine learning pipeline.

Why Use sklearn for Discretization?

Scikit-learn’s KBinsDiscretizer is a robust and flexible tool for discretizing continuous features. It supports multiple strategies (equal-width, equal-frequency, and clustering-based) and integrates well with sklearn pipelines. It can also encode its output as ordinal integers or as one-hot vectors, which makes it well suited to preprocessing in ML workflows.

Step-by-Step
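Here is a minimal sketch of the core steps, assuming a single hypothetical age feature:
1. Create a `KBinsDiscretizer`.
2. Choose `n_bins`, `encode`, and `strategy`.
3. Fit it.
4. Inspect the learned `bin_edges_` and how many samples land in each bin.

Running the three strategies side by side shows the trade-off discussed earlier. `uniform` gives equal-width bins with uneven counts, `quantile` gives roughly equal counts, and `kmeans` places edges between clusters of values.

```python
import numpy as np
from sklearn.preprocessing import KBinsDiscretizer

# Hypothetical customer ages: one continuous feature, so shape (n_samples, 1)
ages = np.array([18, 22, 25, 27, 31, 35, 38, 42, 47, 51, 58, 63, 67, 72, 80, 90],
                dtype=float).reshape(-1, 1)

def bin_ages(strategy, n_bins=4):
    """Discretize `ages` into `n_bins` ordinal bins using the given strategy."""
    kbd = KBinsDiscretizer(n_bins=n_bins, encode="ordinal", strategy=strategy)
    codes = kbd.fit_transform(ages).ravel().astype(int)   # bin index per sample
    return kbd.bin_edges_[0], np.bincount(codes, minlength=n_bins)

for strategy in ("uniform", "quantile", "kmeans"):
    edges, counts = bin_ages(strategy)
    print(f"{strategy:>8}: edges={np.round(edges, 1)} counts={counts}")
```

For a linear model you would typically switch to `encode="onehot"` (sparse output) or `"onehot-dense"`. You would also place the discretizer inside a `Pipeline` so that `n_bins` can be tuned with cross-validation, as noted above.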

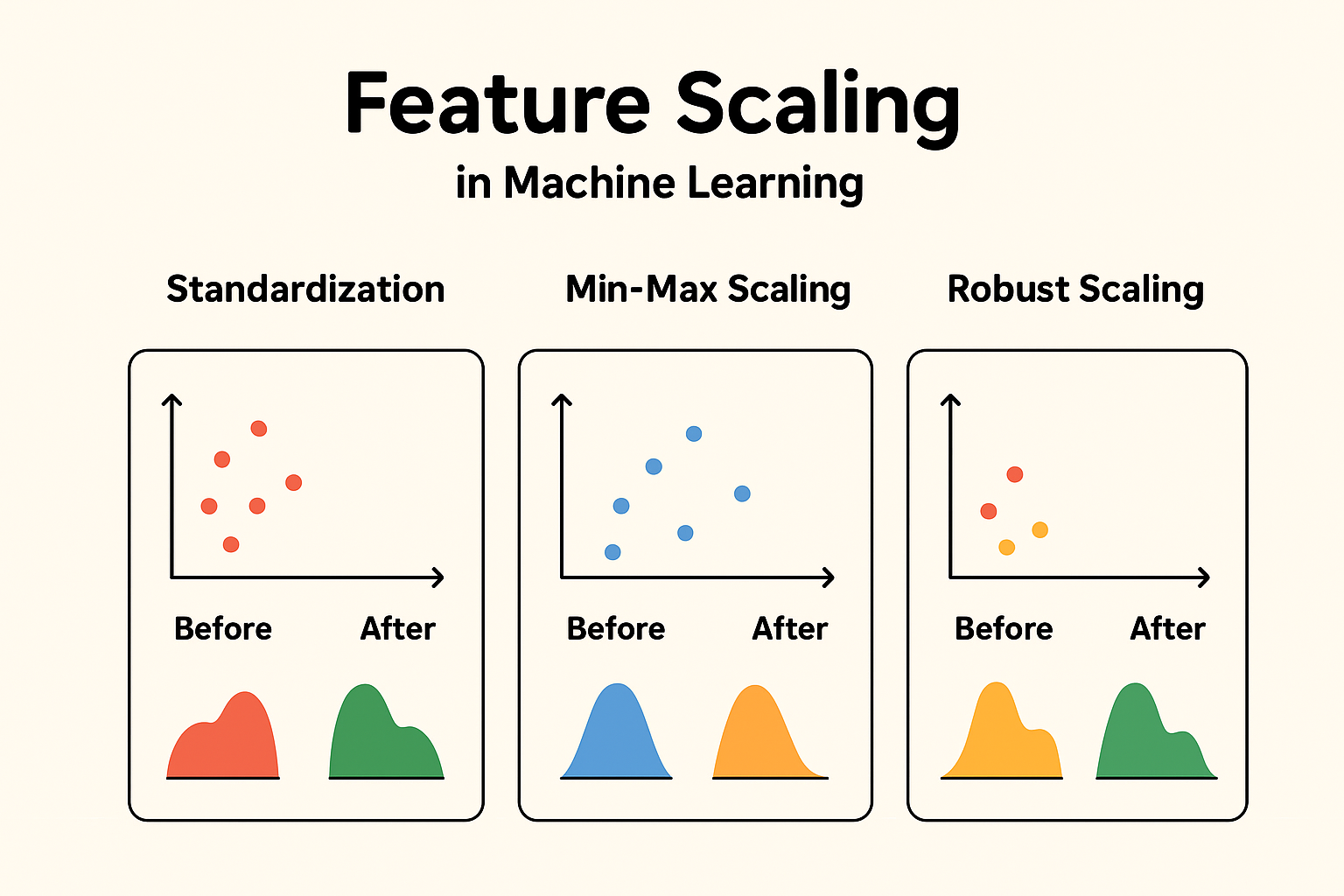

Ultimate Guide to Feature Scaling in Machine Learning: Techniques, Examples, and Best Practices

Feature scaling is a crucial preprocessing step in machine learning that helps your models perform well. If your dataset’s features have varying scales or units, understanding feature scaling can significantly improve model accuracy, convergence speed, and overall efficiency. In this comprehensive guide, we’ll explore what feature scaling is, why it’s essential, and its impact on different models. We’ll then break down popular methods like standardization and min-max scaling in detail. We’ll also cover real-world examples, code snippets, and when to use (or avoid) scaling.

What is Feature Scaling?

Feature scaling is a key technique in machine learning and data preprocessing. It is an umbrella term that covers methods such as normalization and standardization. It transforms the values of numerical features in a dataset so they fall within a similar range or distribution. This prevents any single feature from dominating the others because of differences in magnitude or units.

Common methods for feature scaling include:

Example of Feature Scaling

Consider a dataset with two features: “Age” (ranging from 20 to 60) and “Income” (ranging from $20,000 to $100,000).

Without scaling:

After min-max scaling to [0, 1]:

This makes the features comparable, as both now span the same range.

Why is Feature Scaling Used?

Feature scaling is primarily used to:

In essence, it’s a preprocessing step that ensures all features contribute fairly during model training.
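Here is a small sketch of the Age/Income example using scikit-learn’s `MinMaxScaler`, fit on the ranges quoted above. The query person and the two candidates are hypothetical. The point is that the nearest neighbor by Euclidean distance changes once both features share a [0, 1] scale.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Fit the scaler on the ranges quoted above: Age 20-60, Income $20,000-$100,000
scaler = MinMaxScaler().fit(np.array([[20, 20_000], [60, 100_000]], dtype=float))

# Hypothetical people as (age, income)
query = np.array([[20, 50_000]], dtype=float)
candidates = {
    "a": np.array([[58, 52_000]], dtype=float),  # very different age, similar income
    "b": np.array([[22, 60_000]], dtype=float),  # similar age, income $10k higher
}

def nearest(transform=lambda x: x):
    """Return the candidate closest to `query` (Euclidean) and all distances."""
    q = transform(query)
    dists = {k: float(np.linalg.norm(transform(v) - q)) for k, v in candidates.items()}
    return min(dists, key=dists.get), dists

print(nearest())                  # raw units: income differences dominate
print(nearest(scaler.transform))  # [0, 1] units: age differences count again
```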
Impact of Feature Scaling on Model Performance

Scaling generally has a positive impact on performance for algorithms that are sensitive to feature magnitudes. It can lead to:

However, the impact varies by model type. For insensitive models (discussed later), scaling has negligible or no effect.

Example: KNN Without Scaling

Suppose you have:

KNN calculates Euclidean distance:

$$d = \sqrt{(\text{Age}_1 - \text{Age}_2)^2 + (\text{Income}_1 - \text{Income}_2)^2}$$

Because income is much larger in magnitude, the distance is dominated by income differences, and the effect of age is effectively ignored. After scaling both features to [0, 1], both contribute comparably to the distance metric, which typically leads to better classification accuracy. This is exactly why scaling is critical for distance-based models.

Another Example: Linear Regression with Gradient Descent

Let’s illustrate with a simple linear regression model trained with gradient descent on a dataset whose features have vastly different scales. The target is $y = w_1 f_1 + w_2 f_2 + w_0 + \varepsilon$, where $\varepsilon$ is noise, $f_1$ ranges from 0 to 1, and $f_2$ ranges from 0 to 1000.

Setup:

Results:

This demonstrates that scaling improves both speed (5x faster convergence) and accuracy (lower error) by balancing the loss landscape.

Impacts on the Model if Not Using Feature Scaling

If scaling is not used:

Example Impact

In the same linear regression setup above, the model trained without scaling had roughly 7x higher MSE on test data (5.9 vs. 0.8 with scaling). Consider KNN on an Iris-like dataset with unscaled features (petal length in cm vs. sepal width in mm). Accuracy dropped from 95% to 70% because distance calculations were dominated by the feature expressed in the larger-valued unit.

Models Not Impacted by Feature Scaling

Some models are invariant to feature scaling because they don’t rely on magnitudes, distances, or gradients in the same way:

Summary Table: Model Types and Scaling Impact

| Model Type | Scaling Impact? | Reason |
|---|---|---|
| KNN, K-Means | ✅ High | Uses distance measures |
| SVM (RBF/poly kernels) | ✅ High | Uses distances in kernel space |
| Logistic / Linear Regression | ✅ Medium | Gradient descent & regularization |
| Neural Networks | ✅ Medium–High | Gradient descent benefits from scaling |
| PCA | ✅ High | Variance-based method |
| LDA | ❌ Low | Predictions are unchanged by per-feature rescaling |
| Decision Trees / RF / XGBoost | ❌ Low | Based on feature splits |
| Naive Bayes | ❌ Low | Based on probability, not distance |

Does Feature Scaling Negatively Impact Performance?

In general, scaling does not hurt the performance of machine learning algorithms when applied correctly. For most algorithms, scaling is either beneficial or neutral.

Potential for Negative Impact

Scaling has no direct negative impact on performance for these models. However, it could introduce:

Does Feature Scaling Affect the Distribution of Data?

When we say “distribution” in this context, we typically mean the shape of the data distribution (e.g., whether it’s normal, skewed, or uniform), as seen in a histogram or density plot. Linear scaling methods such as standardization and min-max scaling shift and/or rescale the data but do not change the shape of its underlying distribution.

When to Be Cautious

While scaling preserves distribution shape, be cautious:

Types of Feature Scaling

Now, let’s dive deeper into specific types of feature scaling.

What is Standardization?

Standardization, also known as Z-score normalization, is a preprocessing technique that transforms numerical features so that they have a mean of 0 and a standard deviation of 1. It centers the data around zero and scales it according to how spread out the values are. It does not, however, make a skewed feature Gaussian. The standardized feature keeps its original shape, now with mean 0 and variance 1.

Formula: $X' = \dfrac{X - \mu}{\sigma}$, where $X$ is the original value, $\mu$ is the mean of the feature, $\sigma$ is the standard deviation of the feature, and $X'$ is the standardized value.
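To connect the formula to the gradient-descent example described earlier, here is a minimal NumPy sketch. It standardizes two features with $X' = (X - \mu)/\sigma$ and counts how many gradient-descent steps are needed with and without standardization. The data generator, the true weights, the 1/L step-size rule, and the stopping tolerance are all assumptions chosen for illustration. They are not the article’s original setup, so the sketch will not reproduce the 5x and 7x figures quoted above.

```python
import numpy as np

def make_data(n=200, seed=0):
    """Hypothetical dataset: f1 in [0, 1], f2 in [0, 1000], y linear plus noise."""
    rng = np.random.default_rng(seed)
    f1 = rng.uniform(0, 1, n)
    f2 = rng.uniform(0, 1000, n)
    # Illustrative weights (w1=3, w2=0.005, w0=2), not taken from the article
    y = 3.0 * f1 + 0.005 * f2 + 2.0 + rng.normal(0, 0.1, n)
    return np.column_stack([f1, f2]), y

def standardize(X):
    """Apply X' = (X - mu) / sigma column by column (population std)."""
    return (X - X.mean(axis=0)) / X.std(axis=0)

def gradient_descent(X, y, tol=1e-6, max_iter=20_000):
    """Batch gradient descent on mean squared error; returns (weights, iterations used)."""
    n = len(y)
    A = np.column_stack([np.ones(n), X])           # leading column of ones for w0
    hessian = 2.0 / n * A.T @ A                    # constant Hessian of the MSE loss
    lr = 1.0 / np.linalg.eigvalsh(hessian).max()   # step 1/L, L = largest eigenvalue
    w = np.zeros(A.shape[1])
    for i in range(1, max_iter + 1):
        grad = 2.0 / n * A.T @ (A @ w - y)
        w -= lr * grad
        if np.linalg.norm(grad) < tol:             # stop once the gradient is tiny
            return w, i
    return w, max_iter

X, y = make_data()
_, iters_raw = gradient_descent(X, y)
_, iters_std = gradient_descent(standardize(X), y)
print("iterations without scaling:", iters_raw)
print("iterations with standardization:", iters_std)
```

Without scaling, the step size must be tiny to stay stable along the large-valued f2 direction, so progress along f1 and the bias crawls. Standardization makes the loss surface nearly round, and a single step size suits every direction.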
This method doesn’t bound the values to a specific range (unlike min-max scaling), so it can produce negative values or values greater than 1. It’s particularly useful because it preserves the shape of the original distribution while making features comparable.

[Figure: step-by-step comparison; the original data has mean ≠ 0 and variance ≠ 1]

When is Standardization Used? (With Examples)

Standardization is used when:

When Not to Use It:

Examples:

How is Standardization Used? (With Example and Step-by-Step)

Standardization is typically implemented with libraries like scikit-learn in Python. Here’s a step-by-step guide with a simple numerical example: feature values [1, 4, 5, 11] (e.g., representing “Scores” on a test).

Step-by-Step Process:

In code (using scikit-learn), here’s how to standardize and verify the result:
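A minimal scikit-learn version for the scores [1, 4, 5, 11], with a manual check against the formula. Note that `StandardScaler` uses the population standard deviation, dividing by n rather than n − 1.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# One feature ("Scores"), four samples: shape (4, 1)
scores = np.array([1, 4, 5, 11], dtype=float).reshape(-1, 1)

scaler = StandardScaler()
z = scaler.fit_transform(scores)

# Manual check with X' = (X - mu) / sigma, using the population std (ddof=0)
mu = scores.mean()      # 5.25
sigma = scores.std()    # about 3.63
manual = (scores - mu) / sigma

print("learned mean and scale:", scaler.mean_, scaler.scale_)
print("standardized scores:", z.ravel().round(4))
print("matches formula:", np.allclose(z, manual))
print("new mean and std:", z.mean().round(6), z.std().round(6))
```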

ChatGPT-5: The Next Leap in AI Conversation Technology

ChatGPT-5: Artificial Intelligence’s Next Big Step

Artificial Intelligence (AI) is changing the world very quickly. Thanks to AI, the ways we work, communicate, and solve problems are advancing every day. One of the most remarkable innovations among these achievements is OpenAI’s ChatGPT. Now that ChatGPT-5 has launched, AI has stepped into a new era, one where conversations and solutions are more natural and intelligent than before.

Compared with earlier versions, ChatGPT-5 is not only faster but also smarter, more accurate, and better at understanding context. Business owners, teachers, and students alike are exploring its potential.

🔑 Key Features of ChatGPT-5

📊 ChatGPT-5 vs Previous Versions

| Feature | ChatGPT-3.5 | ChatGPT-4 | ChatGPT-5 (Latest) |
|---|---|---|---|
| Speed | Moderate | Fast | Super-Fast |
| Accuracy | Basic Reasoning | Strong Reasoning | Advanced Reasoning |
| Multilingual Support | Limited | Good | Excellent |
| Creativity | Moderate | High | Very High |
| Human-like Feel | Robotic at times | Better | Almost Natural |

🌍 Real-World Applications

⚖️ Challenges & Ethical Concerns

ChatGPT-5 also raises some concerns:

🚀 Future with ChatGPT-5

ChatGPT-5 is just one step in AI’s journey. Even more personalized experiences, smart assistants, and advanced integrations are expected in the future. But real success will come only when this technology is used responsibly and ethically.

✅ Conclusion

ChatGPT-5 is a milestone in AI development. Its speed, intelligence, and natural interaction have taken AI to a new level. Whether you’re a student, a business owner, or a content creator, anyone can benefit from this technology.