Microsoft just brought back Clippy, but not the way you remember it. It’s now an AI orb named Mico that reacts to your voice, remembers what you say, and even changes expression mid-conversation. Over at Google, engineers built FLAME, a system that adapts AI vision models to niche tasks in minutes on a regular CPU, and the company followed it up with a quantum chip that just ran an algorithm 13,000 times faster than a supercomputer. Meta quietly upgraded Docusaurus with an AI search assistant that lets you chat directly with your documentation. And while all that was happening, Microsoft’s AI chief, Mustafa Suleyman, drew a line in the sand, calling out OpenAI and xAI for adding adult content to their chatbots and warning that this kind of technology could become very dangerous. So, let’s talk about it.

Let’s start with Microsoft, because Mico is honestly one of the strangest throwbacks in years. Almost 30 years after Clippy blinked onto our screens as that slightly irritating paperclip in Office, Microsoft has decided to bring the idea back, evolved. Mico, short for Microsoft Copilot, lives inside Copilot’s voice mode as a small glowing orb that reacts while you talk. It smiles, frowns, blinks, and even tilts its head when the tone of your voice changes. The company has been testing it for months and is now turning it on by default in the United States. “Clippy walked so that we could run,” said Jacob Andreou, Microsoft’s Vice President of Product and Growth. He wasn’t kidding. Mico uses Copilot’s new memory system to recall what you’ve said before and the projects you’re working on. Microsoft also gave it a “Learn Live” mode: a Socratic-style tutor that doesn’t just answer questions but walks you through concepts with whiteboards and visuals, which suits students cramming for finals or anyone practicing a new language. That feature alone makes it feel more like an actual companion than a gimmick. The whole move ties into what Mustafa Suleyman, Microsoft AI’s Chief Executive Officer, has been hinting at: he wants Copilot to have a real identity. He literally said it “will have a room that it lives in and it will age.”

The marketing push is wild, too. New Windows 11 ads call it “the computer you can talk to.” About a decade ago, Microsoft tried something similar with Cortana, and we all know how that ended: the app was shut down on Windows 11 after barely anyone used it. Mico is far more capable than Cortana ever was, but the challenge is the same: convincing people that talking to a computer isn’t awkward. Still, Microsoft is adding little Easter eggs to make it fun. If you poke Mico rapidly, something special happens. So even in 2025, Clippy’s ghost lives on.

Now, over at Google, the story isn’t throwback vibes. It’s raw horsepower. Google’s research team rolled out something called FLAME, which teaches an AI detector to get really good at a niche task, fast. Here’s the problem it fixes: big open-vocabulary detectors like OWLv2 do fine on normal photos, then fall apart on tricky material like satellite and aerial images. Angled shots, tiny objects, and lookalike categories (think chimney versus storage tank) trip them up. Instead of grinding for hours on big GPUs, FLAME lets you adapt the system in roughly a minute per label on a regular CPU.

Here’s the flow in plain terms. First, a general detector such as OWLv2 scans the images and over-collects possible hits for your target, like “chimney.” FLAME then zeroes in on the toss-ups, the candidates the detector isn’t sure about, by grouping similar cases and pulling a small, varied set. You quickly tag around 30 of those as “yes” or “no.” With that tiny batch, it trains a lightweight helper, a small filter such as an RBF-SVM or a simple two-layer MLP, that keeps the real finds and throws out the fakes without touching the main model.
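To make that flow concrete, here is a minimal, dependency-free Python sketch of the two FLAME-style steps: picking uncertain but varied candidates to label, then training a small filter on the tags. It is not Google's implementation, and every detail is an assumption for illustration: candidates are hypothetical 2-D feature vectors with detector scores, "uncertain" means a score close to a 0.5 threshold, diversity comes from plain k-means, and a kernel perceptron with an RBF kernel stands in for the RBF-SVM mentioned above.

```python
import math
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iters=20, seed=0):
    """Plain k-means; returns k centroids (an empty cluster keeps its old centroid)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            groups[nearest].append(p)
        centroids = [
            [sum(col) / len(g) for col in zip(*g)] if g else centroids[i]
            for i, g in enumerate(groups)
        ]
    return centroids


def select_for_labeling(features, scores, threshold=0.5, pool_size=200, k=30, seed=0):
    """Hypothetical helper: pick k candidate indices that are both uncertain and varied."""
    # 1) Uncertainty: keep the candidates whose detector score is closest to the threshold.
    order = sorted(range(len(features)), key=lambda i: abs(scores[i] - threshold))
    pool = order[:pool_size]
    # 2) Diversity: cluster the uncertain pool, then take the member nearest each centroid.
    centroids = kmeans([features[i] for i in pool], k, seed=seed)
    chosen = []
    for c in centroids:
        remaining = [i for i in pool if i not in chosen]
        chosen.append(min(remaining, key=lambda i: sq_dist(features[i], c)))
    return chosen


class RBFKernelPerceptron:
    """Stand-in for the RBF-SVM filter; the frozen detector itself is never modified."""

    def __init__(self, gamma=1.0, epochs=20):
        self.gamma, self.epochs = gamma, epochs

    def _score(self, x):
        return sum(
            a * y * math.exp(-self.gamma * sq_dist(xi, x))
            for a, y, xi in zip(self.alpha, self.y, self.X)
            if a
        )

    def fit(self, X, y):
        """X: feature vectors; y: labels, +1 for a real find and -1 for a lookalike."""
        self.X, self.y, self.alpha = list(X), list(y), [0] * len(X)
        for _ in range(self.epochs):
            mistakes = 0
            for i, (xi, yi) in enumerate(zip(self.X, self.y)):
                if yi * self._score(xi) <= 0:  # misclassified: give this example more weight
                    self.alpha[i] += 1
                    mistakes += 1
            if mistakes == 0:
                break
        return self

    def predict(self, x):
        return 1 if self._score(x) > 0 else -1


if __name__ == "__main__":
    rng = random.Random(42)
    # Synthetic candidates: real chimneys near (2, 2), lookalikes near (-2, -2).
    features = [(rng.gauss(2, 0.5), rng.gauss(2, 0.5)) for _ in range(150)]
    features += [(rng.gauss(-2, 0.5), rng.gauss(-2, 0.5)) for _ in range(150)]
    truth = [1] * 150 + [-1] * 150
    scores = [rng.random() for _ in features]  # detector confidence, uninformative here
    picked = select_for_labeling(features, scores)
    labels = [truth[i] for i in picked]  # simulated "yes"/"no" tags from a human
    flt = RBFKernelPerceptron().fit([features[i] for i in picked], labels)
    print(len(picked), flt.predict((2.1, 1.8)), flt.predict((-1.9, -2.2)))
```

The split into "select, then filter" is the point of the sketch: labeling effort goes only to a small, varied set of borderline cases, and the learned filter sits after the detector instead of inside it.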

The gains are big and easy to read. On the DOTA benchmark (15 aerial categories), stacking FLAME on RS-OWLv2 takes mean average precision from 31.827% zero-shot to 53.96% with just 30 labels. On DIOR (23,000+ images), it jumps from 29.387% to 53.21%. The chimney class alone goes from 0.11 AP to 0.94, which is night and day. And it all runs on a CPU. The base model stays frozen, so you keep its broad knowledge while the tiny add-on nails the specifics. In practice, that means you get a local specialist without burning GPU hours or collecting thousands of examples: exactly the kind of quick, high-impact tweak teams want.
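Why can a filter that only throws out fakes raise average precision so much? A small Python sketch of a simplified, non-interpolated AP over a ranked list of detections makes it visible. This is an illustration only: real DOTA and DIOR evaluation also matches predicted boxes to ground truth by IoU and follows its own interpolation protocol, which the sketch skips, and the ranking below is made up.

```python
def average_precision(ranked_hits, num_positives):
    """Simplified AP: average the precision at each rank where a true positive appears.

    ranked_hits: detections sorted by confidence, True for a real object, False for a fake.
    num_positives: number of ground-truth objects for the class.
    """
    if num_positives == 0:
        return 0.0
    true_pos, total = 0, 0.0
    for rank, hit in enumerate(ranked_hits, start=1):
        if hit:
            true_pos += 1
            total += true_pos / rank  # precision at this rank
    return total / num_positives


def mean_average_precision(per_class):
    """mAP: unweighted mean of per-class AP; per_class is a list of (ranked_hits, num_positives)."""
    return sum(average_precision(h, n) for h, n in per_class) / len(per_class)


# Made-up ranking: two lookalikes (say, storage tanks) outrank two real chimneys.
before = [False, False, True, True]
# A filter that rejects the lookalikes leaves only the real chimneys in the ranking.
after = [hit for hit in before if hit]
print(average_precision(before, 2), average_precision(after, 2))
```

Here AP goes from about 0.42 to 1.0 without the underlying detector changing at all, which mirrors the frozen-base-model idea described above.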

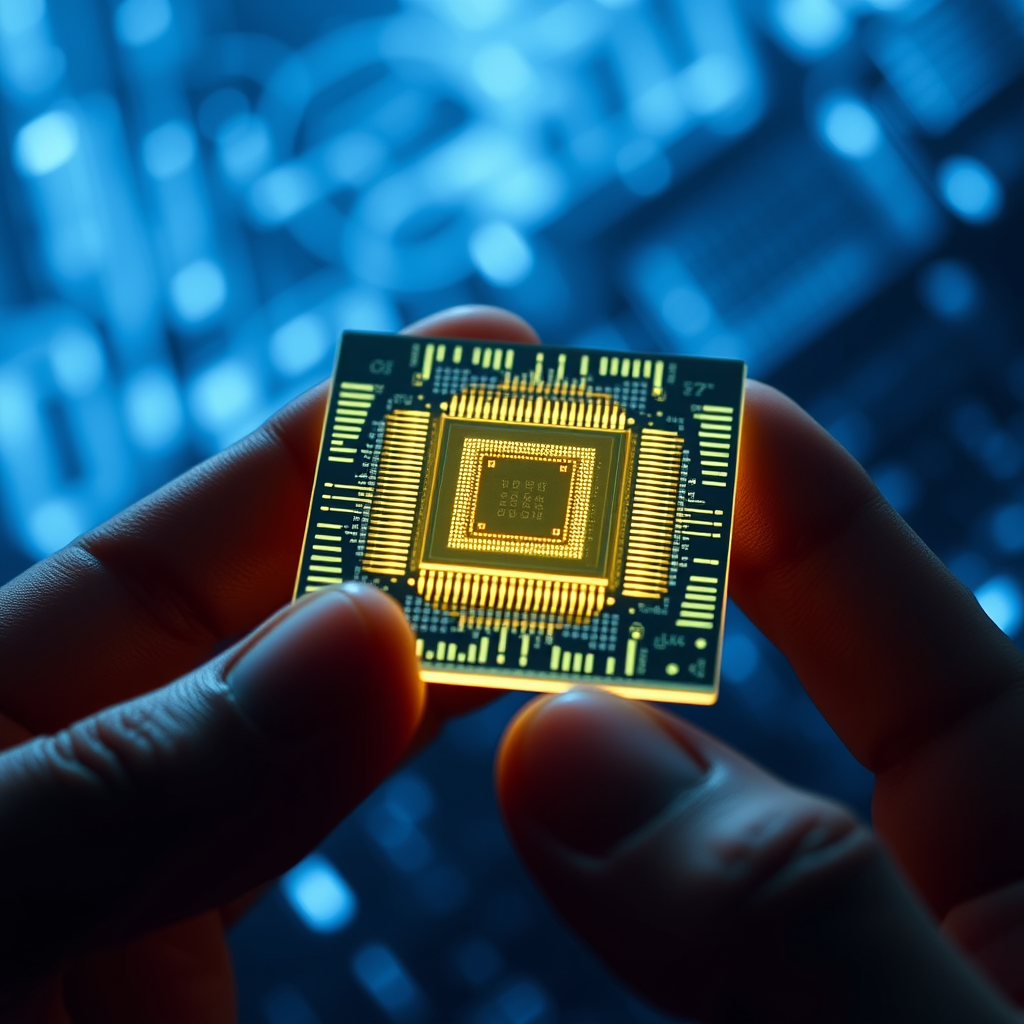

But while FLAME was quietly reshaping how models adapt, another Google division was shaking up physics itself. The company’s quantum team finally delivered what looks like the first practical use case of quantum computing. Its Willow chip, a 105-qubit processor, ran an algorithm called “Quantum Echoes” 13,000 times faster than the best classical supercomputer. That’s not marketing spin: it’s a verifiable result, confirmed by comparing the output directly with real-world molecular data. The algorithm simulates nuclear magnetic resonance experiments, the same science behind MRI machines. It models how atoms’ magnetic spins behave inside molecules, which is insanely complex for classical machines. Google’s engineers managed to send a ping through the qubit array and read millions of effects per second without disturbing the system, basically peeking inside quantum states without breaking them. The outcome could be checked and repeated, something rare in quantum computing, where results are usually probabilistic guesses.

That verification step is why this run matters so much. It shows the chip isn’t just producing noise, but usable, reproducible data. The Willow experiment represents the largest data collection of its kind in quantum research and pushed the error rate low enough to make practical results possible. It’s not cracking encryption yet, but it’s proof that quantum chips can now outperform classical supercomputers on specific real-world tasks. Google is calling it Milestone 2 on its roadmap, and next up is building a long-lived logical qubit, basically a building block for fault-tolerant quantum computers. For decades, scientists have been saying practical quantum computing is just a few years away. This might be the first time it’s actually true.
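The "echo" idea is easier to picture with a toy. The Python sketch below simulates a generic Loschmidt echo on a few simulated qubits: run a circuit forward, optionally flip one "butterfly" qubit, run the exact inverse circuit, and measure the probability of landing back in the starting state. It is an assumption-laden illustration, not Google's Quantum Echoes algorithm (which measures out-of-time-order correlators on Willow hardware); the circuit of RX rotations plus a chain of CZ gates and every function name are hypothetical. It also hints at why brute-force classical simulation scales badly: the statevector holds 2**n complex amplitudes.

```python
import math
import random

PAULI_X = ((0, 1), (1, 0))


def rx(theta):
    """Single-qubit X rotation as a 2x2 matrix (tuple of rows)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return ((c, -1j * s), (-1j * s, c))


def apply_1q(state, q, gate):
    """Apply a 2x2 gate to qubit q of a statevector stored as a list of 2**n amplitudes."""
    (a, b), (c, d) = gate
    out = list(state)
    bit = 1 << q
    for i in range(len(state)):
        if not (i & bit):  # i has qubit q = 0; its partner j has qubit q = 1
            j = i | bit
            out[i] = a * state[i] + b * state[j]
            out[j] = c * state[i] + d * state[j]
    return out


def apply_cz(state, q1, q2):
    """Controlled-Z: flip the sign of every amplitude where both qubits are 1."""
    mask = (1 << q1) | (1 << q2)
    return [-amp if (i & mask) == mask else amp for i, amp in enumerate(state)]


def forward(state, n, angles):
    """Each layer: an RX rotation on every qubit, then a chain of CZ gates."""
    for layer in angles:
        for q, theta in enumerate(layer):
            state = apply_1q(state, q, rx(theta))
        for q in range(n - 1):
            state = apply_cz(state, q, q + 1)
    return state


def backward(state, n, angles):
    """Exact inverse of forward(): undo the layers in reverse order."""
    for layer in reversed(angles):
        for q in range(n - 1):  # CZ gates commute and are their own inverse
            state = apply_cz(state, q, q + 1)
        for q, theta in enumerate(layer):
            state = apply_1q(state, q, rx(-theta))  # RX(-theta) undoes RX(theta)
    return state


def echo(n, angles, butterfly_qubit=None):
    """Probability of returning to |00...0> after forward, optional flip, then backward."""
    state = [0j] * (1 << n)
    state[0] = 1 + 0j
    state = forward(state, n, angles)
    if butterfly_qubit is not None:
        state = apply_1q(state, butterfly_qubit, PAULI_X)  # the small perturbation
    state = backward(state, n, angles)
    return abs(state[0]) ** 2


if __name__ == "__main__":
    rng = random.Random(7)
    n = 4
    angles = [[rng.uniform(0, math.pi) for _ in range(n)] for _ in range(3)]
    print("no perturbation:", round(echo(n, angles), 6))
    print("flip qubit 0:   ", round(echo(n, angles, butterfly_qubit=0), 6))
```

Without the flip, the echo returns exactly to the start (probability 1, up to rounding). With the flip, it generally lands below 1, by an amount that depends on how far the perturbation spread through the circuit, which is the kind of sensitivity echo-style measurements exploit.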

Meanwhile, over at Meta, something less dramatic but still impactful dropped: Docusaurus 3.9. Docusaurus is Meta’s React-based static site generator, the engine behind countless open-source documentation sites, and the new version brings AI-powered search right into the docs. It integrates Algolia’s DocSearch 4 with the new “Ask AI” feature, so you can now chat with your documentation: instead of typing keywords, you ask a question in natural language and the embedded assistant answers based on the indexed pages. Projects already on Docusaurus 3 can upgrade incrementally by running a single npm command: `npm update @docsearch/react`. The AI assistant becomes available as soon as you switch to DocSearch 4, which is nice for teams that want a smooth migration.
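Conceptually, "chat with your docs" starts with retrieval: find the indexed pages most relevant to a question, then typically hand them to a language model to phrase an answer. The Python sketch below shows only that retrieval step, using a toy TF-IDF score over three made-up pages. It is an assumption-based illustration; it is not how Algolia's DocSearch or Ask AI is implemented, and the page titles and text are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase word tokens; 'per-locale' becomes ['per', 'locale']."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Return per-page term counts and a smoothed IDF weight for every term."""
    docs = {title: Counter(tokenize(body)) for title, body in pages.items()}
    doc_freq = Counter(term for counts in docs.values() for term in counts)
    n = len(docs)
    idf = {term: math.log((1 + n) / (1 + df)) + 1 for term, df in doc_freq.items()}
    return docs, idf


def retrieve(question, docs, idf, top_k=1):
    """Rank pages by a simple TF-IDF dot product with the question."""
    q = Counter(tokenize(question))

    def score(counts):
        return sum(q[t] * counts[t] * idf.get(t, 0.0) ** 2 for t in q)

    return sorted(docs, key=lambda title: score(docs[title]), reverse=True)[:top_k]


# Hypothetical documentation pages.
PAGES = {
    "Installation": "Install Docusaurus with npm. Node.js 20 or newer is required.",
    "i18n": "Configure locales, translations and a per-locale baseUrl.",
    "Search": "Enable Algolia DocSearch and the Ask AI assistant.",
}

docs, idf = build_index(PAGES)
print(retrieve("How do I set a different baseUrl for each locale?", docs, idf))  # → ['i18n']
```

A production assistant would use far better ranking (and usually embeddings), but the shape is the same: the answer is grounded in whatever pages the index returns.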

Docusaurus 3.9 also raises the Node.js minimum to version 20.0.0 and officially drops Node 18, which has reached end-of-life anyway. Some developers argued that this should count as a breaking change, but Meta insists it’s minor since Node 18 is no longer supported. Other upgrades focus on internationalization. Developers can now override base URLs per locale using `i18n.localeConfigs[locale]`, enabling separate domains for different languages. There’s also a new `translate` flag, off by default, which speeds up builds for sites that don’t need translations. Sidebar items can now have explicit keys for better organization, and translation fixes landed for Brazilian Portuguese and Ukrainian. On the visual side, the release adds Mermaid ELK layout support for richer diagram rendering and switches to Rspack 1.5 under the hood for faster builds. Migration from 3.8 is almost effortless: verify your Node version and update the Algolia configuration if you’re enabling Ask AI. It’s the kind of quiet but solid release that keeps the open-source backbone of the web running smoothly.
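The "verify your Node version" step is easy to script. Below is a small Python sketch that parses the output of `node --version` and compares it with the Node.js 20.0.0 minimum; the helper names are hypothetical, and the check is a plain major/minor/patch tuple comparison that ignores pre-release tags.

```python
import re
import shutil
import subprocess

MIN_NODE = (20, 0, 0)  # Docusaurus 3.9 minimum, per the release notes above


def parse_node_version(text):
    """Turn output like 'v20.11.1' into (20, 11, 1)."""
    m = re.match(r"v?(\d+)\.(\d+)\.(\d+)", text.strip())
    if not m:
        raise ValueError(f"unrecognized Node.js version string: {text!r}")
    return tuple(int(part) for part in m.groups())


def meets_minimum(text, minimum=MIN_NODE):
    """Tuples compare element by element, so (18, 20, 4) < (20, 0, 0)."""
    return parse_node_version(text) >= minimum


if __name__ == "__main__":
    node = shutil.which("node")
    if node is None:
        print("node not found on PATH")
    else:
        out = subprocess.run([node, "--version"], capture_output=True, text=True, check=True).stdout
        print(out.strip(), "OK" if meets_minimum(out) else "too old for Docusaurus 3.9")
```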

And while Meta’s open-source team was rolling out AI for documentation, Microsoft’s AI chief, Mustafa Suleyman, was making headlines of a very different kind. Speaking at the Paley International Council Summit in California, he said Microsoft will not build chatbots capable of generating erotic or romantic content, calling those capabilities “very dangerous.” The timing is key, because both Sam Altman’s OpenAI and Elon Musk’s xAI have been moving in that direction. Musk’s Grok already offers flirty companion modes, and Altman recently confirmed that ChatGPT will soon allow verified adult users to generate explicit material under age-gated settings. Suleyman made it clear that Microsoft won’t follow that path: “That’s just not a service we’re going to provide. Other companies will build that.”

His stance also shows how Microsoft and OpenAI are starting to drift apart. Although Microsoft has invested $13 billion in OpenAI since 2019, reports say OpenAI recently signed a $300 billion computing deal with Oracle, one of Microsoft’s biggest rivals. Microsoft, meanwhile, is pouring its own energy into Copilot, aiming for what Suleyman calls “human-centered AI.” He has been openly skeptical of machine consciousness, warning that giving AI human-like behaviors makes regulation harder. This week, he doubled down, saying that “AI avatars and bot experiences risk normalizing dangerous behavior.”

And honestly, he’s not the only one worried. Billionaire Mark Cuban said OpenAI’s move could backfire badly, predicting that parents will ditch ChatGPT if kids can bypass the age verification. “This is going to backfire hard,” he wrote on X. Altman responded that OpenAI “isn’t the elected moral police of the world,” emphasizing adult freedom, but the debate keeps growing. Experts like Georgetown researcher Jessica Gu think OpenAI is in a tough spot: it sees consumer demand for erotic content, but it also has to convince investors and regulators that it’s building tools that benefit humanity.
It’s that tension between market reality and moral responsibility that’s defining AI right now.

And that’s the download for today. What do you think? Should AI assistants start remembering us, teaching us, and even showing emotion? Or is that a step too far? Drop your thoughts in the comments. Make sure to subscribe and hit the like button if you enjoyed this one. And as always, thanks for watching. Catch you in the next one.