Ultimate Guide to Iterative Imputer and KNN Imputer in Machine Learning (with Manual Examples)

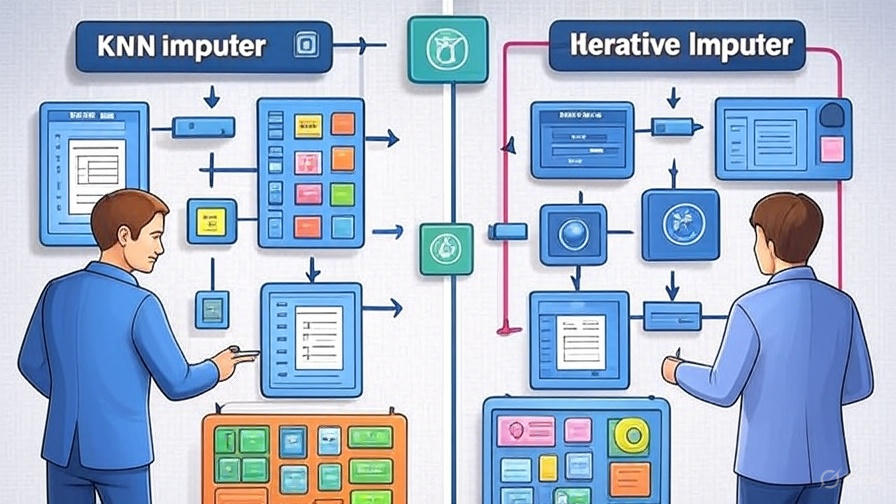

Handling missing data is a crucial step in any machine learning pipeline. Two powerful techniques for this are:

- Iterative Imputer
- KNN Imputer

In this blog, we’ll explore both methods from scratch, using easy-to-understand language, manual examples, and real-world use cases. Whether you’re a beginner or someone brushing up, this guide will give you the complete picture.

Why Does Handling Missing Values Matter?

Real-world datasets often have null or missing values in columns. If you skip this step:

Thus, proper imputation (filling in missing data) is critical.

What Is Iterative Imputer?

Iterative Imputer is a method that fills missing values in a dataset using multivariate imputation. It treats each feature with missing values as a regression problem and predicts the missing values from the other features.

In simple terms:

“Instead of just filling missing values with a mean or median (which is naive), let’s learn what the missing value could have been by looking at patterns in the other columns.”

Why Is It Used?

It is used because:

When and Where Is It Used?

Use Iterative Imputer when:

Don’t use it when:

How Does Iterative Imputer Work (Step by Step)?

Let’s go through it step by step: first conceptually, then with a simple manual numerical example, and finally with code.

Steps:

1. Identify the columns that contain missing values.
2. Fill every missing value with an initial guess, such as the column mean.
3. Pick one column with missing values, treat it as the target, train a regression model on the other columns, and replace the guesses in that column with the model's predictions.
4. Repeat this process for all columns with missing values.
5. Iterate steps 3–4 until the values converge or until a maximum number of iterations is reached.

Result: You get a complete dataset, filled with more intelligent estimates than a plain mean or median.

Dataset with missing values:

| A | B | C |
|---|---|---|
| 1.0 | 2.0 | 3.0 |
| 2.0 | NaN | 6.0 |
| 3.0 | 6.0 | NaN |
| NaN | 8.0 | 9.0 |

Step 1: Initialize missing values

Let’s fill the missing values with column means first:

| A | B | C |
|---|---|---|
| 1.0 | 2.0 | 3.0 |
| 2.0 | 5.33 | 6.0 |
| 3.0 | 6.0 | 6.0 |
| 2.0 | 8.0 | 9.0 |

Step 2: Start Imputation

Let’s say we want to impute B in row 2 (originally NaN). Train a simple linear regression that predicts B from A and C, using the rows where B was originally observed.
For row 2: A = 2.0, C = 6.0 → predict B.

Assume the regression model gives B = 5. So, we replace 5.33 with 5.0 (a better estimate based on regression).

Column C is imputed next, followed by column A, each in the same way.

Iteration Cycle:

This cycle (imputing B → C → A) is repeated for multiple iterations (up to 10 by default in scikit-learn), so the estimates keep improving each time. Each time:

Important Notes:

| Concept | Description |
|---|---|
| Initial guess | Usually mean/median imputation |
| Predictive model | BayesianRidge by default, but you can use any regressor (e.g., RandomForestRegressor) |
| Each column treated as target | One at a time, while using the others as predictors |
| Updated values used | Yes, the latest values are always used in subsequent imputations |

Code Implementation:

```python
import numpy as np
import pandas as pd
from sklearn.experimental import enable_iterative_imputer  # noqa: F401 (must be imported before IterativeImputer)
from sklearn.impute import IterativeImputer
from sklearn.linear_model import BayesianRidge

# Sample data with missing values
data = pd.DataFrame({
    'A': [1, 2, np.nan, 4],
    'B': [2, np.nan, 6, 8],
    'C': [np.nan, 5, 6, 9],
    'D': [3, 4, 2, np.nan],
})
print("Original Data:")
print(data)

# Create IterativeImputer
# estimator=BayesianRidge() and max_iter=10 are the scikit-learn defaults
imputer = IterativeImputer(estimator=BayesianRidge(), max_iter=10, random_state=0)

# Fit and transform
imputed_array = imputer.fit_transform(data)

# Convert back to DataFrame
imputed_data = pd.DataFrame(imputed_array, columns=data.columns)
print("\nImputed Data:")
print(imputed_data)
```

What Is the Relationship Between Iterative Imputer and MICE?

Iterative Imputer – The Tool

Note: With max_iter = 10, Iterative Imputer returns a single dataset without missing values. MICE, by contrast, produces several completed datasets (multiple imputations). How many it produces is set by the number of imputations requested, not by the number of iterations.

What Is KNN Imputer?

KNN Imputer (K-Nearest Neighbors Imputer) fills in missing values by finding the K most similar (nearest) rows based on the other feature values, and then taking the average (or weighted average) of those neighbors' values to fill the missing value.
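To make the "find the K nearest rows, then average" idea concrete, here is a minimal sketch in plain Python. It assumes a NaN-aware Euclidean distance that only compares coordinates present in both rows and rescales by the fraction of usable coordinates, similar in spirit to scikit-learn's `nan_euclidean` metric. The helper names `nan_euclidean` and `knn_impute_cell` and the toy data are made up for illustration and are not part of any library.

```python
import math

NAN = float("nan")

def nan_euclidean(u, v):
    """Euclidean distance over coordinates present in both rows,
    rescaled by (total coordinates / usable coordinates)."""
    pairs = [(a, b) for a, b in zip(u, v)
             if not (math.isnan(a) or math.isnan(b))]
    if not pairs:
        return math.inf  # no shared information: treat as infinitely far
    scale = len(u) / len(pairs)
    return math.sqrt(scale * sum((a - b) ** 2 for a, b in pairs))

def knn_impute_cell(rows, row_idx, col_idx, k=2):
    """Estimate rows[row_idx][col_idx] as the plain mean of that column
    over the k nearest rows that have the column observed."""
    target = rows[row_idx]
    candidates = [
        (nan_euclidean(target, other), other[col_idx])
        for i, other in enumerate(rows)
        if i != row_idx and not math.isnan(other[col_idx])
    ]
    candidates.sort(key=lambda pair: pair[0])  # nearest first
    nearest = [value for _, value in candidates[:k]]
    if not nearest:
        return NAN  # no donor rows available
    return sum(nearest) / len(nearest)

# Hypothetical toy data: the second column of the third row is missing.
toy = [
    [1.0, 10.0],
    [2.0, 20.0],
    [3.0, NAN],
    [10.0, 100.0],
]
filled = knn_impute_cell(toy, row_idx=2, col_idx=1, k=2)
print(filled)  # 15.0: mean of the two nearest rows' values, 20.0 and 10.0
```

The rescaling keeps distances comparable when two rows share fewer coordinates than two others; a weighted variant would replace the plain mean with an average weighted by inverse distance.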
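KNN Imputer is a non-parametric, instance-based imputation method.

In simple terms:

“It finds the most similar (nearest) rows based on other columns and uses their values to fill in the missing data.”

When and Why to Use KNN Imputer?

When:

Avoid when:

Step-by-Step Example (Manual Calculation):

| Row | Feature1 | Feature2 | Feature3 |
|---|---|---|---|
| A | 1 | 2 | 3 |
| B | 2 | NaN | 4 |
| C | 3 | 6 | NaN |
| D | 4 | 8 | 6 |
| E | NaN | 10 | 7 |

We’ll use K = 2 neighbors, Euclidean distance, and the plain mean of the neighbors' values.

How Is Distance Calculated?

For each row with a missing value:

- Compute the Euclidean distance to the other rows using only the remaining features (excluding the one being imputed).
- Consider only rows that have a value for the feature being imputed.
- Take the K nearest rows and average their values for that feature.

Step 1: Impute Feature2 for Row B

Row B = [2, NaN, 4]

We want to impute Feature2. We compute distances excluding Feature2, so we use Feature1 and Feature3.

Compare with rows that have a Feature2 value:

| Row | Feature1 | Feature2 | Feature3 |
|---|---|---|---|
| A | 1 | 2 | 3 |
| C | 3 | 6 | NaN ❌ |
| D | 4 | 8 | 6 |
| E | NaN ❌ | 10 | 7 |

Only A and D can be used (C has NaN in Feature3, E has NaN in Feature1).

Distances from B = [2, NaN, 4], using Feature1 and Feature3 only:

A = [1, 2, 3]: Distance(B, A) = sqrt((2 - 1)^2 + (4 - 3)^2) = sqrt(1 + 1) = sqrt(2) ≈ 1.41

D = [4, 8, 6]: Distance(B, D) = sqrt((2 - 4)^2 + (4 - 6)^2) = sqrt(4 + 4) = sqrt(8) ≈ 2.83

2 nearest neighbors: A and D. Their Feature2 values: 2 (A), 8 (D).

Imputed value for Feature2 (Row B): Mean = (2 + 8)/2 = 5

Row B → Feature2 = 5

Step 2: Impute Feature3 for Row C

Row C = [3, 6, NaN]

We’ll use Feature1 and Feature2. Compare with rows that have a Feature3 value:

| Row | Feature1 | Feature2 | Feature3 |
|---|---|---|---|
| A | 1 | 2 | 3 |
| B | 2 | 5 ✅ | 4 |
| D | 4 | 8 | 6 |
| E | NaN ❌ | 10 | 7 |

Valid rows: A, B, D. The distances from C are ≈ 4.47 (A), ≈ 1.41 (B), and ≈ 2.24 (D), so the 2 nearest neighbors are B and D, with Feature3 values 4 and 6. Mean = (4 + 6)/2 = 5

Row C → Feature3 = 5

Step 3: Impute Feature1 for Row E

Row E = [NaN, 10, 7]

Use Feature2 and Feature3 to compute distance. Compare with rows that have a Feature1 value:

| Row | Feature1 | Feature2 | Feature3 |
|---|---|---|---|
| A | 1 | 2 | 3 |
| B | 2 | 5 | 4 |
| C | 3 | 6 | 5 |
| D | 4 | 8 | 6 |

2 nearest: D (4), C (3)

Imputed Feature1: (4 + 3)/2 = 3.5

Row E → Feature1 = 3.5

Final Imputed Dataset

| Row | Feature1 | Feature2 | Feature3 |
|---|---|---|---|
| A | 1 | 2 | 3 |
| B | 2 | 5 | 4 |
| C | 3 | 6 | 5 |
| D | 4 | 8 | 6 |
| E | 3.5 | 10 | 7 |

Keep in mind that this manual walkthrough is a simplification: it skips neighbors that have missing values in the predictor columns and reuses values imputed in earlier steps. scikit-learn's KNNImputer instead uses a NaN-aware Euclidean distance and draws neighbor values only from the originally observed data, so its output on this dataset can differ from the table above.

Imputation Strategy:

Code Implementation:

```python
from sklearn.impute import KNNImputer
import pandas as pd
import numpy as np

# Create the dataset
data = {
    'Feature1': [1, 2, 3, 4, np.nan],
    'Feature2': [2, np.nan, 6, 8, 10],
    'Feature3': [3, 4, np.nan, 6, 7],
}
df
```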